Deepfake Phishing Simulations: The New Battleground for Cybersecurity Teams

Feb 02


Introduction

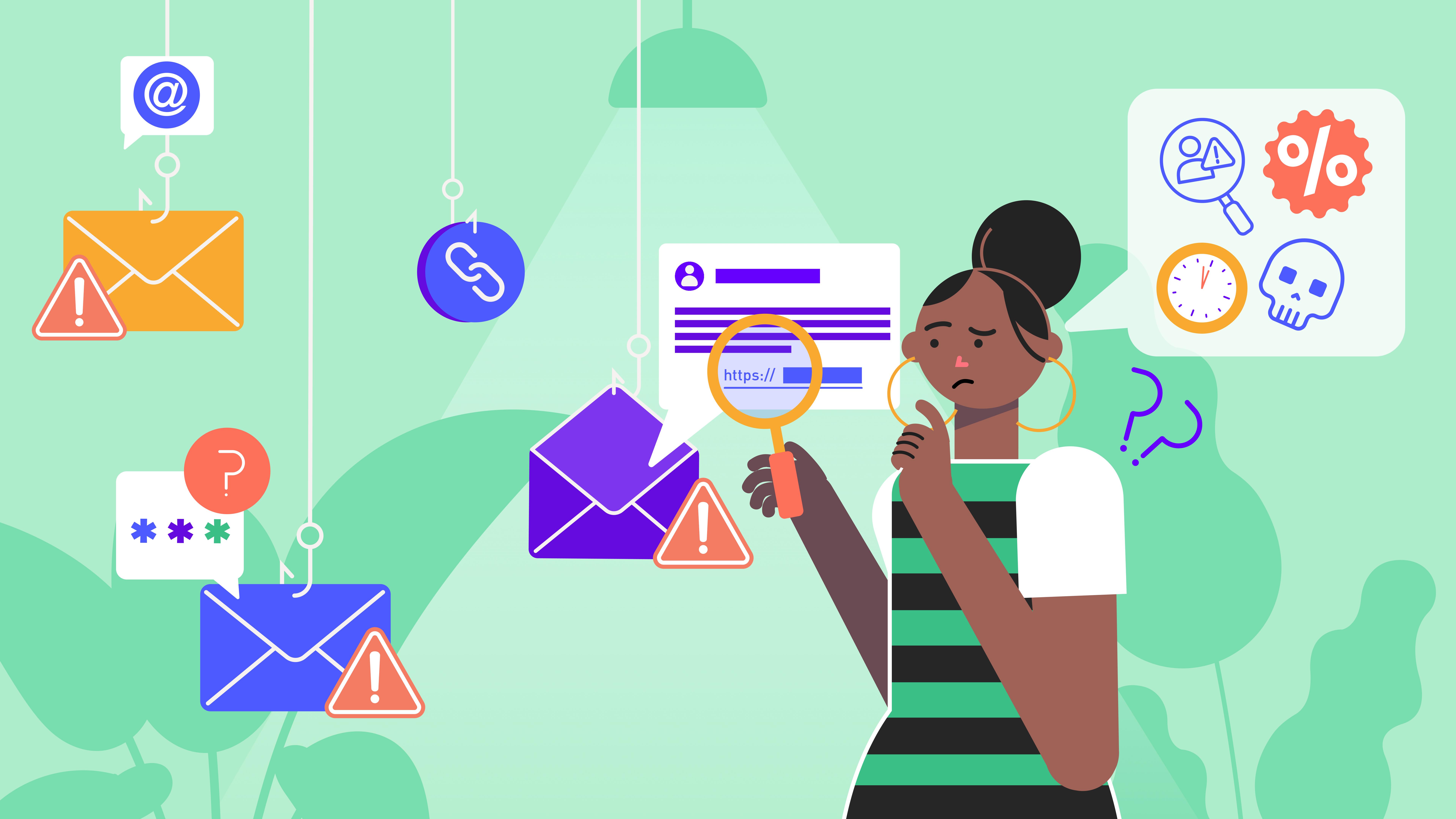

Deepfake phishing has already outgrown the protection offered by traditional phishing simulations and awareness training. These attacks are faster, more personalised, and increasingly multimodal, and they succeed not because of technical gaps but because they exploit human decision-making under pressure.

Deepfakes are no longer a future risk or an edge case. They are actively being used to impersonate executives, authorise fraudulent payments, and capture credentials inside real organisations. In the first quarter of 2025 alone, reported losses from deepfake-enabled fraud exceeded $200 million, and the volume of AI-assisted phishing has risen sharply across enterprises. Yet most organisations are still preparing for yesterday’s threat model.

What this blog will help you understand

- Why deepfake phishing succeeds even when organisations run regular phishing simulations and awareness training

- How modern attacks use voice, video, and personal context to bypass email-only defences

- What real behavioural signals (not click rates) show whether employees are actually prepared

- How today’s deepfake phishing tools differ from traditional phishing platforms in what they measure and train

- Why human decision-making under pressure has become the primary security control in AI-driven attacks

- What “human resilience” means in practice for enterprises facing deepfake and AI-enabled social engineering

1. Deepfake phishing is expanding, and the data should worry you

According to DeepStrike, the number of known deepfake files grew from around 500,000 in 2023 to more than 8 million by 2025. That kind of growth doesn’t happen by accident. It signals automation, commoditisation, and attacker confidence. Over the same period, the report states that fraud attempts linked to deepfakes increased by nearly 3,000%, with voice-based attacks (vishing) rising the fastest.
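Growth figures like these are easier to compare once they are converted into multiples and doubling times. The sketch below is a back-of-the-envelope calculation, assuming the 500,000 and 8 million figures are 24 months apart and that growth was smooth and exponential; the report's exact measurement dates may differ, and real growth is rarely that even.

```python
import math


def growth_summary(start: float, end: float, months: float) -> dict:
    """Summarise growth between two counts, assuming smooth exponential growth."""
    multiple = end / start               # how many times larger the end value is
    pct_increase = (multiple - 1) * 100  # the same change expressed as a percentage
    doublings = math.log2(multiple)      # number of doublings needed to get there
    return {
        "multiple": multiple,
        "pct_increase": pct_increase,
        "doubling_time_months": months / doublings,
    }


def multiple_from_pct(pct_increase: float) -> float:
    """Convert a percentage increase into a growth multiple (e.g. +100% -> 2x)."""
    return 1 + pct_increase / 100


# Known deepfake files: ~500,000 (2023) -> ~8 million (2025), assumed 24 months apart
print(growth_summary(500_000, 8_000_000, months=24))
# {'multiple': 16.0, 'pct_increase': 1500.0, 'doubling_time_months': 6.0}

# A "nearly 3,000%" rise in deepfake-linked fraud attempts
print(multiple_from_pct(3_000))  # 31.0
```

Read this way, the file counts imply a 16-fold increase, or a doubling roughly every six months if growth were steady, while a 3,000% rise means about 31 times the starting level.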

Keepnet Labs reported that deepfake-related fraud incidents recorded in early 2025 had already exceeded the total for all of 2024: in the first quarter of 2025 alone, incidents were 19% higher than across the entire previous year.

The financial impact is no longer theoretical. Resemble AI’s Q1 2025 Deepfake Incident Report shows over $200 million in financial losses in just three months, and those are only the cases organisations were willing to report publicly.

But the most revealing statistic explains why these attacks work so well.

Industry reports have found that 82.6% of phishing emails now include AI-generated content. These aren't random spam blasts; they are targeted messages enriched with intelligence scraped from LinkedIn profiles, corporate websites, executive bios, and even the signature blocks in your team's emails. Attackers aren't taking shots in the dark anymore. They're doing their homework.

This is why deepfake phishing feels different. It doesn’t look like an attack. It looks like work.

2. Anatomy of a deepfake phishing attack: how trust is engineered step by step

Deepfake phishing doesn’t rely on a single message or moment. It’s a carefully orchestrated process designed to blend seamlessly into your everyday work.

Step 1: Context harvesting (no hacking required)

Attackers begin by mapping the organisation:

- Who reports to whom

- Who approves payments

- How leaders speak and write

- What “normal” looks like internally

They pull this from LinkedIn, company websites, YouTube recordings of your CEO's conference talks and podcast appearances, and investor calls: anywhere your organisation leaves a digital footprint. All of it becomes their training data.

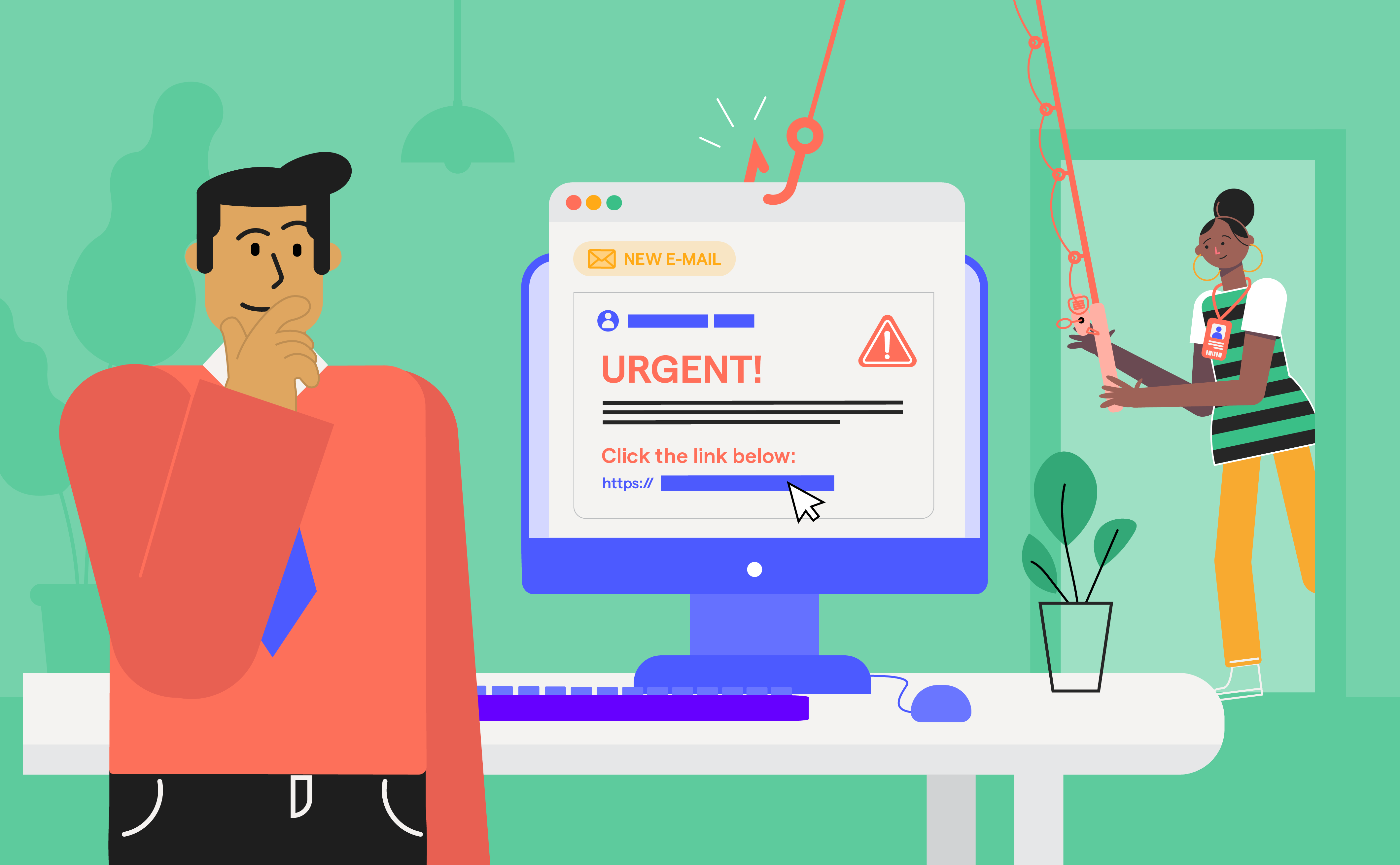

Step 2: A low-friction first touch

The first contact is intentionally boring:

- A short internal-sounding email

- A meeting invite

- A quick message on Teams or WhatsApp

No urgency yet, and no suspicious links. Just enough interaction to register as familiar, so that the next contact feels like a continuation rather than an intrusion.

Step 3: Voice or video impersonation

Once attention is secured, the attack escalates smoothly to a voice or video call.

Now you're hearing a voice that sounds exactly like your boss. Or you're on a video call where faces, expressions, and mannerisms are spot-on.

The language is deliberate:

“This is confidential.”

“I need this handled quickly.”

“I trust you with this.”

At this stage, most phishing instincts shut down because the interaction feels internal, credible, and time-sensitive.

Step 4: The request that doesn’t feel risky

The final ask is framed as routine:

- An urgent transfer

- A request to bypass a process

- Credentials needed “temporarily”

By the time this happens, the victim isn't thinking critically about risk. They're responding to what feels like a reasonable request from someone they trust.
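Steps 3 and 4 share a recognisable pattern: a high-impact action, requested over a channel that can be faked, wrapped in urgency or secrecy. The sketch below shows one way a team might encode that pattern as a "pause and verify" rule for training or process design. The `Request` fields, action categories, and cue phrases are all hypothetical examples, not any vendor's logic, and keyword matching like this is a teaching aid rather than a detection control.

```python
from dataclasses import dataclass

# Hypothetical action categories that should never proceed on the strength of a call alone
HIGH_RISK_ACTIONS = {"payment_transfer", "process_bypass", "credential_share"}

# Channels where a sender's identity, voice, or face can be faked
SPOOFABLE_CHANNELS = {"email", "chat", "voice_call", "video_call"}

# Illustrative pressure phrases, modelled on the language quoted in Step 3
PRESSURE_CUES = ("confidential", "quickly", "urgent", "trust you", "between us")


@dataclass
class Request:
    channel: str
    action: str
    message: str
    verified_out_of_band: bool = False  # confirmed via a separate, known-good contact route


def pressure_cues_found(message: str) -> list[str]:
    """Return the illustrative pressure cues that appear in the message."""
    text = message.lower()
    return [cue for cue in PRESSURE_CUES if cue in text]


def must_pause_and_verify(req: Request) -> bool:
    """True if the request should be held until confirmed through an independent channel."""
    if req.verified_out_of_band:
        return False
    if req.action in HIGH_RISK_ACTIONS:
        # High-impact actions always need independent confirmation, however real the caller seems
        return True
    # Routine requests are still held when pressure arrives over a channel that can be faked
    return req.channel in SPOOFABLE_CHANNELS and bool(pressure_cues_found(req.message))


call = Request(
    channel="video_call",
    action="payment_transfer",
    message="This is confidential. I need this handled quickly.",
)
print(pressure_cues_found(call.message))  # ['confidential', 'quickly']
print(must_pause_and_verify(call))        # True
```

The key design choice is that high-risk actions trigger verification regardless of how convincing the caller is, because in a deepfake attack the familiar voice or face is itself the forgery.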

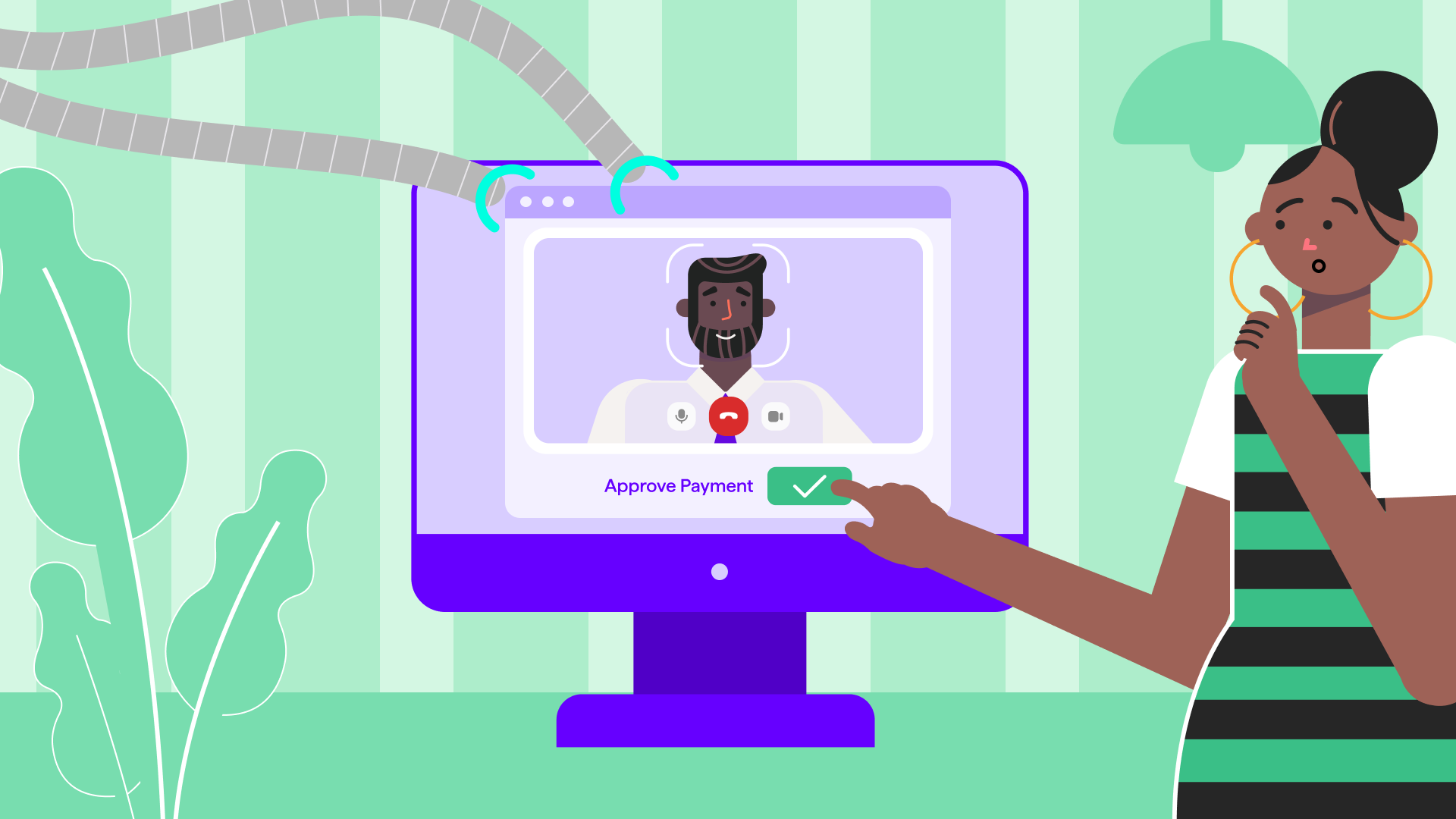

A case that changed how enterprises view deepfakes

In early 2024, a finance employee at global engineering firm Arup joined what appeared to be a routine internal video call with senior leadership. The faces and voices on the call were familiar, the instructions clear and urgent. Acting on what seemed like legitimate authority, the employee approved a series of confidential transfers.

Only later did investigators discover the truth: every other participant on the call, including the apparent leadership team, had been an AI-generated deepfake. No systems were breached and no malware was used. By the time the fraud was uncovered, Arup had lost approximately HK$200 million (roughly US$25 million), making it one of the most significant deepfake-enabled fraud cases reported to date.

It was, quite simply, a flawless manipulation of trust, authority, and normal business procedure.

3. Why traditional phishing simulations no longer work

Most phishing simulations were designed for a threat landscape defined by bad grammar, suspicious links, and unknown senders.

That world doesn’t exist anymore.

Today, nearly 83% of phishing emails contain AI-generated content. They're produced faster, personalised better, and written more convincingly than anything a human scammer could manage at scale. But the real problem runs deeper than better writing.

Traditional simulations are fundamentally limited because they’re:

- Email-only

- Static

- Detached from real decision-making pressure

But deepfake phishing is multimodal. It unfolds across email, chat, voice, and video - often within minutes.

Email-only simulations don’t test:

- Authority bias

- Urgency

- Cross-channel confusion

- Real-time judgment
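One way to see this gap is to model a simulation as an ordered chain of stages across channels and record where each participant stopped. The sketch below is hypothetical: the stage names, the `Outcome` values, and the scoring are assumptions that loosely mirror Steps 2 to 4 of the attack anatomy in section 2. It shows that an email-only test observes only the first stage, where two very different employees can look identical.

```python
from enum import Enum


class Outcome(Enum):
    COMPLIED = "complied"  # engaged and moved on to the next stage
    REPORTED = "reported"  # flagged the interaction to security
    EXITED = "exited"      # disengaged without complying or reporting


# Hypothetical four-stage chain loosely mirroring the attack anatomy in section 2
SCENARIO = ("email_intro", "teams_message", "voice_call", "transfer_request")


def score_chain(outcomes: list[Outcome]) -> dict:
    """Score one participant's complete run through the chain.

    `outcomes` lists what the participant did at each stage they reached, in order.
    A complete run ends at the first stage where they did not comply, or at the final stage.
    """
    for stage, outcome in zip(SCENARIO, outcomes):
        if outcome is not Outcome.COMPLIED:
            return {"stopped_at": stage, "how": outcome.value, "compromised": False}
    # Complied at every stage, including the final ask
    return {"stopped_at": SCENARIO[-1], "how": Outcome.COMPLIED.value, "compromised": True}


def email_only_view(outcomes: list[Outcome]) -> str:
    """What a single-stage, email-only simulation would record for the same person."""
    return outcomes[0].value


alice = [Outcome.COMPLIED, Outcome.COMPLIED, Outcome.REPORTED]
bob = [Outcome.COMPLIED] * len(SCENARIO)

print(email_only_view(alice), email_only_view(bob))  # complied complied
print(score_chain(alice))
print(score_chain(bob))
```

At the email stage both participants behaved the same way; only the later stages separate Alice, who reported the voice call, from Bob, who went on to complete the transfer.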

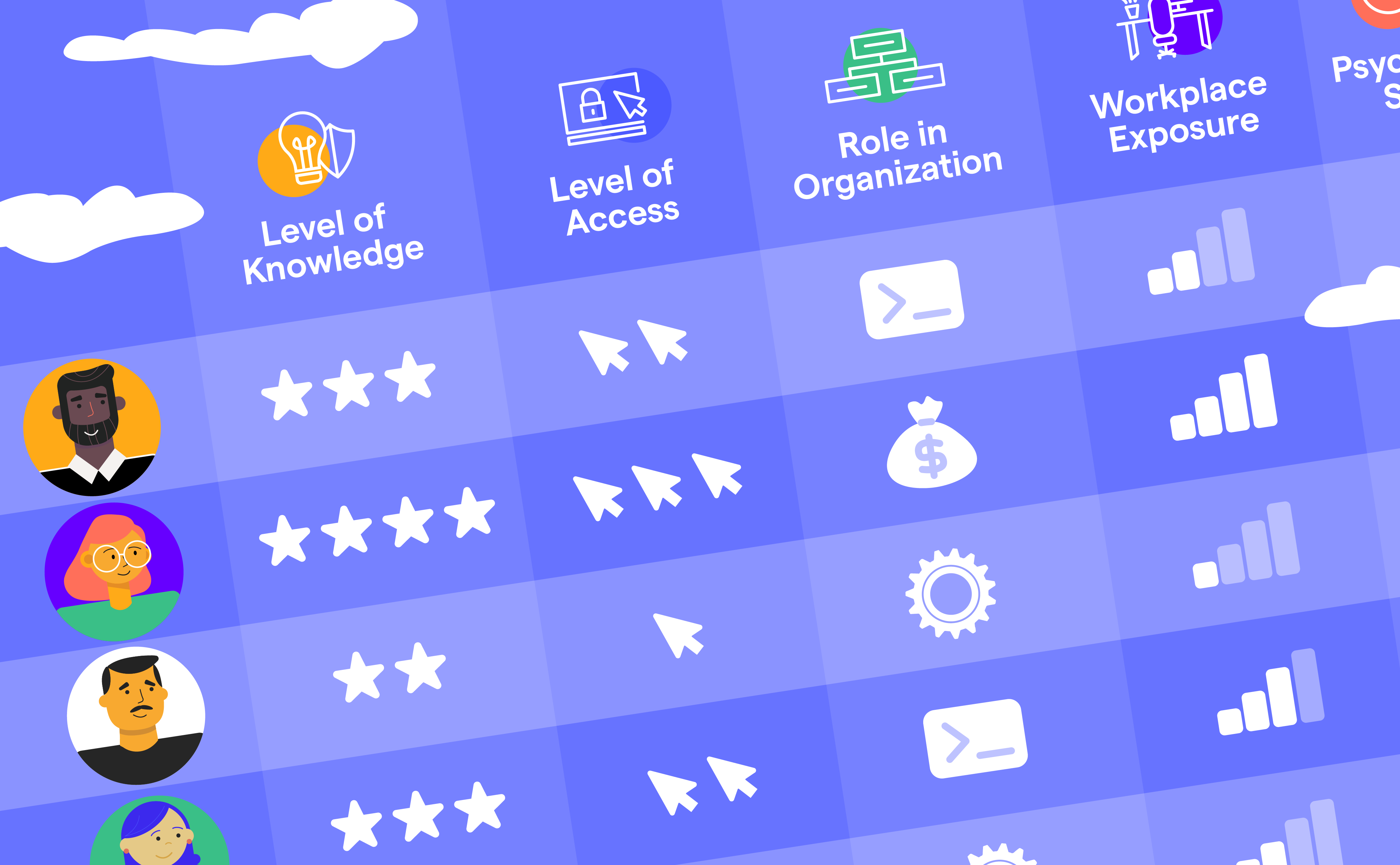

4. What behavioural KPIs actually matter in deepfake simulations

If you're still measuring success by click rate, you're tracking the wrong metric.

Deepfake attacks don't succeed because people fail to recognise suspicious keywords or spot fake sender addresses. They succeed because of how people make decisions when trust, authority, and urgency collide.

What actually reflects preparedness are behavioural signals like:

Reporting rate: The percentage of users who report a suspicious email instead of ignoring it or clicking. High reporting rates show proactive threat recognition and a security-aware workforce. Many mature programs aim for 80% or more reporting as a benchmark for strong awareness. (ISA Cybersecurity Inc.)

Open rate: The percentage of recipients who open a phishing simulation message. This helps separate exposure from risky behaviour by showing whether employees even see the lure before acting. (cira.ca)

Time-to-report / Mean Time to Report (MTTR): How long it takes from when a suspicious message lands to when it’s reported. Faster reporting means employees are thinking first, acting second, and that the SOC gets a head start on real threats. (Kymatio)

Repeat offender rate: The percentage of people who fall for multiple simulations over time. This identifies teams or individuals who need more targeted coaching rather than one-off training. (Keepnet Labs)

Dwell time: The time between receiving a phishing message and a user interacting with it (reporting, clicking, or dismissing). Shorter dwell times with correct reporting suggest better detection instincts and verification behaviour. (Brightside AI)

Verification behaviours (proxy metrics): While not standard labels everywhere, many enterprise dashboards track signals related to verification, such as reporting before clicking and time-to-report. These capture whether users pause and double-check suspicious interactions, a key real-world behaviour. (Keepnet Labs)
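Once simulation results are logged per employee, the KPIs above reduce to simple ratios and averages. The sketch below assumes a hypothetical `SimResult` record; real platforms export different fields and may define each metric slightly differently. The formulas follow the definitions given in this section, and `report_without_click_rate` is just one possible proxy for verification behaviour.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class SimResult:
    """One employee's result in one simulation campaign (hypothetical schema)."""
    user: str
    campaign: str
    delivered_at: datetime
    opened: bool = False
    clicked: bool = False
    reported_at: Optional[datetime] = None      # when they reported it, if ever
    first_action_at: Optional[datetime] = None  # first report, click, or dismissal


def reporting_rate(results: list[SimResult]) -> float:
    """Share of delivered simulations that were reported."""
    return sum(r.reported_at is not None for r in results) / len(results)


def open_rate(results: list[SimResult]) -> float:
    """Share of delivered simulations that were opened."""
    return sum(r.opened for r in results) / len(results)


def _mean_gap(pairs) -> Optional[timedelta]:
    """Average gap between (start, end) timestamps, or None if there are none."""
    seconds = [(end - start).total_seconds() for start, end in pairs]
    return timedelta(seconds=mean(seconds)) if seconds else None


def mean_time_to_report(results: list[SimResult]) -> Optional[timedelta]:
    """MTTR: average delivery-to-report time, over reported simulations only."""
    return _mean_gap((r.delivered_at, r.reported_at) for r in results if r.reported_at)


def mean_dwell_time(results: list[SimResult]) -> Optional[timedelta]:
    """Average delivery-to-first-interaction time, over simulations with any interaction."""
    return _mean_gap((r.delivered_at, r.first_action_at) for r in results if r.first_action_at)


def repeat_offender_rate(results: list[SimResult]) -> float:
    """Share of employees who clicked in two or more simulations."""
    users = {r.user for r in results}
    clicks = Counter(r.user for r in results if r.clicked)
    return sum(clicks[u] >= 2 for u in users) / len(users)


def report_without_click_rate(results: list[SimResult]) -> float:
    """Verification proxy: simulations that were reported and never clicked."""
    return sum(r.reported_at is not None and not r.clicked for r in results) / len(results)


def minutes(n: int) -> timedelta:
    return timedelta(minutes=n)


# Illustrative data: two campaigns, three employees
t1, t2 = datetime(2025, 1, 6, 9, 0), datetime(2025, 2, 3, 9, 0)
results = [
    SimResult("ana", "c1", t1, opened=True, reported_at=t1 + minutes(4), first_action_at=t1 + minutes(4)),
    SimResult("ben", "c1", t1, opened=True, clicked=True, first_action_at=t1 + minutes(10)),
    SimResult("ana", "c2", t2, opened=True, reported_at=t2 + minutes(2), first_action_at=t2 + minutes(2)),
    SimResult("ben", "c2", t2, opened=True, clicked=True, first_action_at=t2 + minutes(6)),
    SimResult("cal", "c2", t2),  # delivered but never opened
]

print(reporting_rate(results))             # 0.4
print(open_rate(results))                  # 0.8
print(mean_time_to_report(results))        # 0:03:00
print(mean_dwell_time(results))            # 0:05:30
print(repeat_offender_rate(results))       # 0.3333333333333333
print(report_without_click_rate(results))  # 0.4
```

MTTR and dwell time are averaged only over simulations where the relevant action happened, so they are best read alongside the reporting rate: a fast MTTR from a handful of reporters says little about the rest of the workforce.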

5. The deepfake phishing vendor landscape

As deepfake phishing evolves beyond email into voice, video, and real-time impersonation, the vendor landscape starts to look uneven. Many platforms were built to simulate phishing messages, not to understand how humans behave when authority, urgency, and realism collide. That distinction matters because deepfake attacks succeed precisely in those moments.

| Vendor Name | Deepfake training / awareness | Deepfake phishing simulation | Behavioural analytics / HRM | Core strengths |
|---|---|---|---|---|
| OutThink | ✅ | ❌ | ✅ | Human risk management, behaviour change, personalised risk, long-term resilience |
| Hoxhunt | ✅ | ✅ | ✅ | Highly realistic multi-stage deepfake attack chains, strong engagement |
| Proofpoint Phishing Simulations | ✅ | ❌ | ✅ | Email-based phishing templates and enterprise integration |
| Phished | ✅ | ❌ | ❌ | Email-based phishing templates and enterprise integration |
| Living Security | ✅ | ✅ | ✅ | Culture-driven awareness and behavioural insights |
| Abnormal Security (AI Phishing Coach) | ✅ | ❌ | ✅ | AI-assisted email coaching |
| Breacher.ai | ✅ | ✅ | ❌ | Bespoke, red-team style multi-channel simulations |
| Adaptive Security | ✅ | ✅ | ❌ | Multi-channel simulations with high realism |
| Revel8 | ✅ | ✅ | ❌ | Custom executive-level social engineering simulations |
| OSS / DIY (Phishing Frenzy + voice sim) | ❌ | ✅ | ❌ | Full control and customisation (high effort, low scale) |

a. OutThink

OutThink approaches deepfake phishing from a human risk management (HRM) lens rather than pure attack simulation. Instead of recreating deepfake attacks, it focuses on how and why people comply under authority, urgency, and context, which is exactly what deepfakes exploit. This makes it especially effective against fast-evolving, real-world AI impersonation attacks.

Key highlights

- Behaviour change over technical simulation

- Tracks hesitation, validation, reporting, and exit behaviour

- Personalised risk profiles for each employee

- Prepares employees to detect real deepfake threats, not just pass tests

- Builds long-term resilience against AI-driven social engineering

b. Hoxhunt

Hoxhunt is one of the few platforms offering bespoke, realistic deepfake attack simulations. Its strength lies in chaining multiple stages together to mimic how real deepfake phishing campaigns unfold across channels.

Key highlights

- Multi-channel attack chains (email → fake Teams/Meet/Zoom calls)

- Cloned executive voices and avatars

- Behaviour KPIs like reporting rate, drop-off, and final failure

- Micro-training and follow-ups for high-risk users

c. Proofpoint Phishing Simulations

Proofpoint’s phishing simulations are designed primarily for email-based phishing awareness and integrate tightly with its wider email security ecosystem. While effective for traditional phishing, they are less aligned with deepfake impersonation attacks.

Key highlights

- Email-only phishing simulations

- Template-driven campaigns

- Strong enterprise integration

- Limited coverage for voice, video, or impersonation-based deepfakes

d. Phished

Phished focuses on helping employees recognise phishing patterns through email-only simulations. Its approach is awareness-centric and best suited for baseline phishing education.

Key highlights

- Email template-based simulations

- Pattern recognition training

- Focus on awareness, not behaviour under pressure

- Not designed for multi-stage or deepfake attacks

e. Living Security

Living Security takes a culture-first approach to security awareness, aiming to influence employee behaviour over time. While it provides behavioural insights, its simulations rely more on templates than bespoke deepfake scenarios.

Key highlights

- Behaviour and culture-driven awareness programs

- Workforce risk insights

- Template-based simulations

- Limited deepfake-specific attack modelling

f. Abnormal Security (AI Phishing Coach)

Abnormal’s AI Phishing Coach focuses on coaching users after phishing exposure, layered on top of its email detection capabilities. It remains largely email-centric in scope.

Key highlights

- AI-guided post-incident coaching

- Email-focused phishing defence

- Strong detection integration

- Does not simulate or train for voice/video deepfakes

g. Breacher.ai

Breacher.ai operates more like a red-team social engineering platform, delivering highly customised, multi-channel simulations. It excels at realism but is primarily a testing tool rather than a behaviour-change platform.

Key highlights

- Bespoke, multi-channel simulations

- Can include deepfake voice impersonation

- High realism and custom scenarios

- Focused on testing, not long-term HRM

h. Adaptive Security

Adaptive Security offers flexible, multi-channel phishing simulations designed to closely mirror real attacks. Its strength lies in realism rather than sustained behavioural analytics.

Key highlights

- Multi-channel phishing simulations

- Mix of bespoke and template scenarios

- High attack realism

- Behaviour measured per campaign, not over time

i. Revel8

Revel8 specialises in highly targeted social engineering simulations, often aimed at executives or high-risk roles. These simulations are realistic but not designed for organisation-wide behavioural modelling.

Key highlights

- Bespoke, executive-level simulations

- Multi-channel impersonation scenarios

- Strong realism for targeted testing

- Limited focus on continuous workforce behaviour change

j. OSS / DIY (Phishing Frenzy + voice simulation)

DIY and open-source approaches give teams maximum control but require significant internal effort. They are best suited for organisations with strong in-house security engineering capabilities.

Key highlights

- Fully customisable simulations

- Can include voice or call-based phishing

- High setup and maintenance effort

- Minimal behavioural analytics and scalability

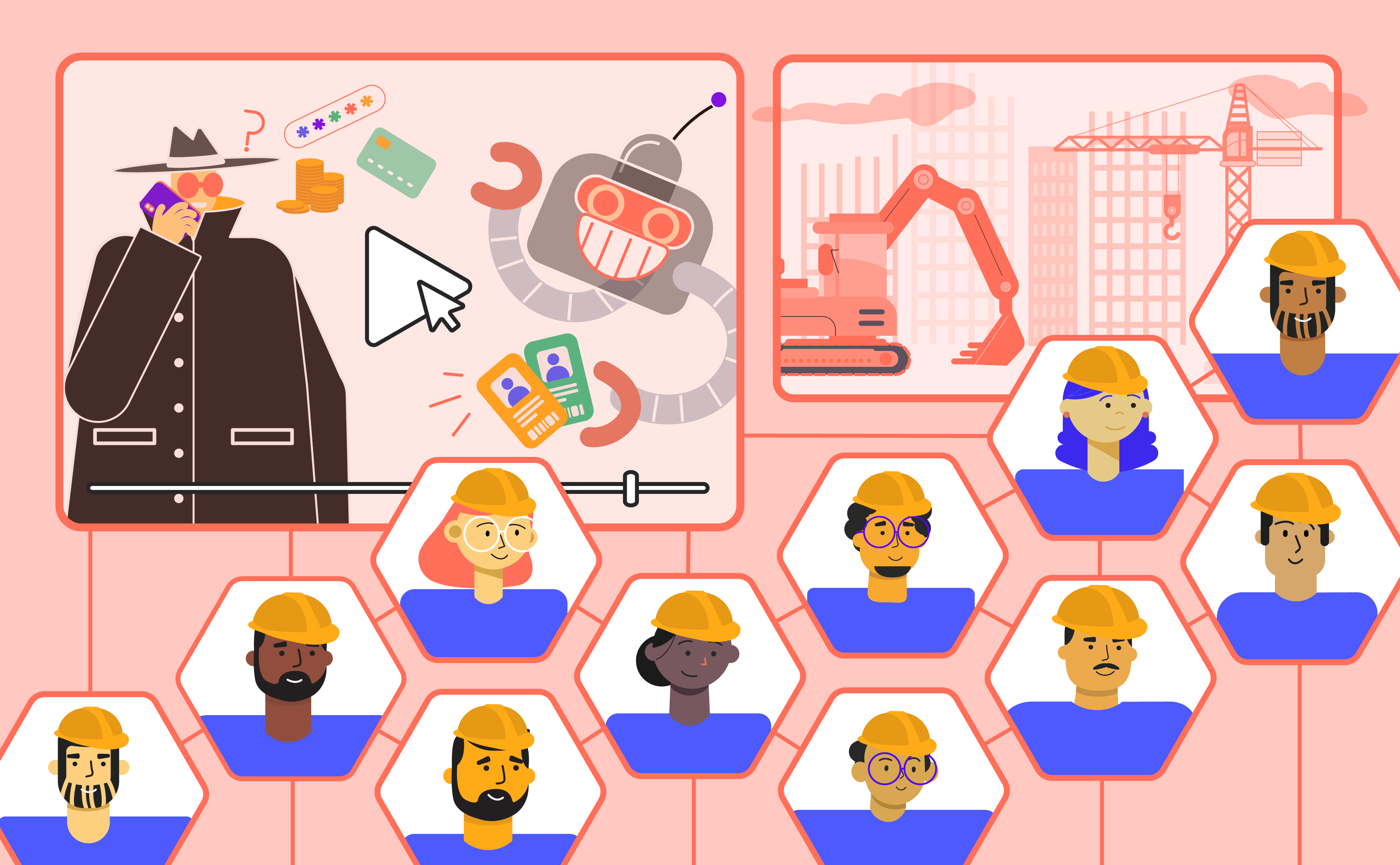

6. The human and technical blind spots deepfakes exploit

Deepfakes aren't just fooling people. They're fooling the machines we built to catch them.

Research published on ScienceDirect and arXiv shows that even advanced detection systems struggle with real-world audio and video deepfakes, especially outside curated datasets. Detection accuracy drops sharply in “in-the-wild” conditions.

Large surveys of deepfake detection research highlight another challenge: multimodal deepfakes (audio + video) are significantly harder to detect than single-mode fakes. When attackers synchronise a cloned voice with a convincing video, detection tools often fail.

The result is a set of dangerous gaps:

- Technology lags

- Humans over-trust realism

- Speed beats verification

7. What enterprise leaders should look for in 2026

By 2026, choosing a deepfake phishing solution won't be about comparing feature lists or ticking compliance boxes. Those signals belong to an older threat model, one where attacks were obvious and defences were straightforward. The real question leaders need to ask is whether a platform can actually prepare people to make the right decision when pressure, authority, and realism are all colliding at once.

Modern deepfake attacks don't arrive as suspicious emails with typos and weird links you can spot from a mile away. They show up as familiar voices, urgent video calls, and what feel like "internal" requests that demand immediate action. Your brain isn't in threat-detection mode. You're just trying to help your boss before their next meeting.

That's why organisations need tools that go beyond email and actually test how employees behave in moments of uncertainty, not just whether they can recognise phishing patterns while sitting calmly in a training module.

The strongest platforms get this. They measure behaviour under pressure. They track how responses change over time, not just during phishing campaigns. They adapt training to individual risk profiles because your finance team faces different threats than your marketing team.

Most importantly, they help employees develop the instinct to pause, validate, and exit suspicious situations, even when everything in the interaction feels completely legitimate. Even when saying "let me verify this first" feels awkward.

If a tool can't prepare people for voice and video deception, or can't actually show you how human risk is decreasing (not just click rates improving), it isn't solving today's problem.

The same logic applies to SMS-based attacks: our guide on preventing AI-driven smishing at the human layer explores what effective defences actually look like.

8. Final thought: cybersecurity’s hardest problem is still human

Deepfake phishing isn't a technology problem that happens to involve people. It's a human problem that technology has weaponised. Attackers aren't breaking into systems anymore; they're breaking into trust. They're exploiting our instinct to help, to comply, and not to question someone who sounds like our boss asking for a quick favour.

The future of defence isn’t just smarter tools or louder alarms. It’s developing human judgment, which is the ability to sense when something is off, slow down, and confirm before acting, even when a familiar voice is demanding immediate action.

OutThink's HRM-centric approach recognises this reality. The goal isn't to catch every deepfake before it lands; you'll eventually lose that arms race. It's about cultivating people who can sense when the rhythm of a request doesn't quite match the music, even when everything looks and sounds perfect.

Because when AI can fake anything, human judgment isn't just your last line of defence. It's your best one.