Beyond Detection: The New Model for Deepfake Awareness Training

Feb 09


Introduction

You might join a call that looks like routine internal work, see some familiar faces and hear their familiar voices. The urgency they create is believable, and their requests fit neatly into your workflow - maybe to share a document or approve payments. Nothing throughout the call feels suspicious.

That’s precisely how deepfake attacks succeed.

Deepfake attacks are designed to blend into normal business interactions. The trick begins with normality, passing as an ordinary, trusted interaction without friction or resistance.

The assumption that people can visually or audibly identify deepfake impersonation rests on the idea that something will look or sound “off.” That assumption no longer holds. A 2024 study shows that people consistently overestimate their ability to tell real from fake, especially when audio and video quality are high.

Pressure makes this even worse. Under urgent conditions, people stop carefully scanning and instead rely on surface cues like faces, voices, and perceived authority. Another study found that high time pressure reduced anomaly-detection sensitivity by around 15-20%, with participants skimming more and verifying less!

Deepfake risk is not a detection problem; it is a decision problem. Modern deepfakes collapse the assumptions behind traditional awareness training, which relies on visible errors, conscious scrutiny, and time to evaluate. When identity, context, and urgency align, people act the way work has trained them to act: efficiently and trustingly. This is why improving realism alone in deepfake security awareness training (SAT) does not improve readiness.

Effective deepfake awareness training must focus on behaviour under pressure, verification habits inside real workflows, and systems that can surface risk at the moment decisions are made. The sections that follow examine why legacy training fails, how training design shapes behaviour, and what true deepfake readiness requires in practice.

Why traditional awareness training collapses against deepfakes

Deepfakes defeat awareness by changing where deception lives: they shift it away from visible cues and blend it into your routine work and decision-making. Traditional security awareness training is built on the idea that attackers introduce anomalies and that users can learn to notice them. Deepfake attacks succeed by removing anomalies altogether and exploiting trust at both a perceptual and procedural level.

At an operational level, this breakdown can be traced to the following mechanisms:

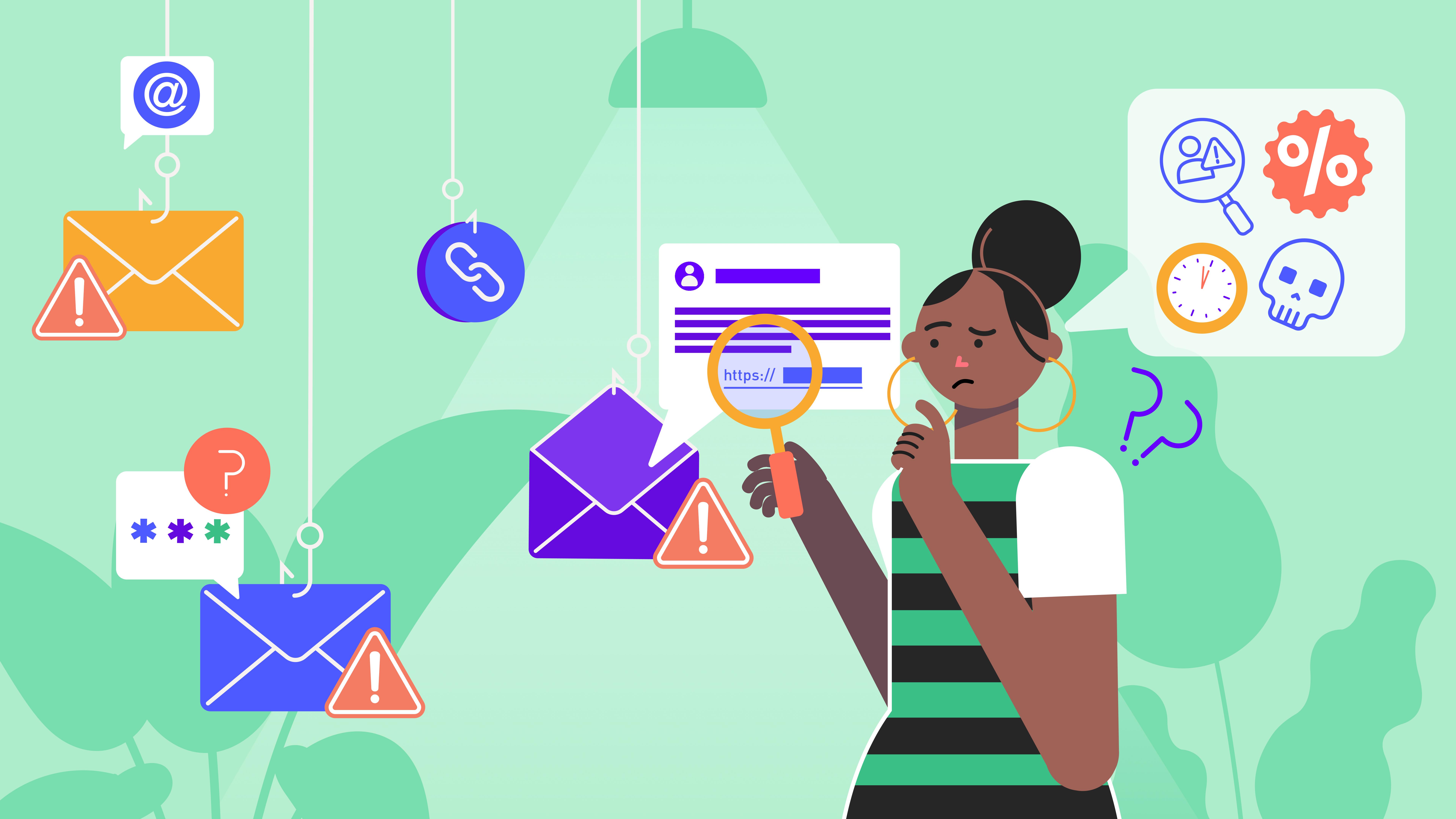

- Signal-level identity spoofing: Modern deepfakes replicate facial movements, lip-syncing, and vocal timbre so accurately that users can no longer rely on visual or audio cues.

- Workflow-conformant embedding: Requests are placed inside normal business processes like approvals, payroll changes, or executive escalations. The interaction looks operational rather than adversarial, which means the brain treats it as routine work instead of a threat.

- Authority simulation: Likeness cloning reproduces leadership cues that trigger automatic compliance before analytical reasoning kicks in. When the request sounds like it is coming from a CEO or finance head, people respond socially first and critically later.

- Temporal pressure engineering: Attackers deliberately add urgency to push users out of evaluation mode and into execution mode. This is not new, but deepfakes make urgency far more convincing because the messenger itself looks legitimate.

- Multimodal consistency reinforcement: Face, voice, language, and situational context are aligned so the brain treats the interaction as system-validated rather than suspicious.

- Automation bias activation: Because everything appears technically and socially correct, users defer to perceived legitimacy instead of questioning authenticity.

The implication is uncomfortable: training that teaches people to hunt for red flags assumes red flags will exist.

A 2024 meta-analysis of 69 empirical studies found that while training increases knowledge and awareness, its impact on actual behaviour, meaning resistance to social engineering, is limited. Knowing about threats does not reliably translate into safer actions.

A more recent 2026 study comparing humans and machines found AI detectors reaching 97% accuracy while human accuracy hovered near chance (about 50%), influenced heavily by truth bias, the tendency to assume authenticity unless proven otherwise. The study concluded that people are poorly equipped to detect deepfakes in realistic conditions and that training must build behavioural instincts rather than confidence alone.

Why training design matters more than threat realism

What matters most is not how realistic the fake looks, but how the training shapes behaviour when something feels real. Recent research reveals that human ability to detect deepfakes is often no better than chance, and that people tend to overestimate their detection skills, even when media quality is high. A 2024 systematic review shows wide variability in human deepfake detection performance, with mixed results from intervention strategies. Another study comparing humans and AI models found that people perform only slightly better than random guessing and frequently overestimate their accuracy. Experiments on speech deepfakes under realistic conditions further show that lack of prior warning significantly reduces detection sensitivity.

These findings show that spotting a deepfake is often unreliable, even for trained eyes. What really counts is how training shapes the way people act when something seems suspicious, not just how real the ‘deepfake’ looks. Here are some key design shifts that matter:

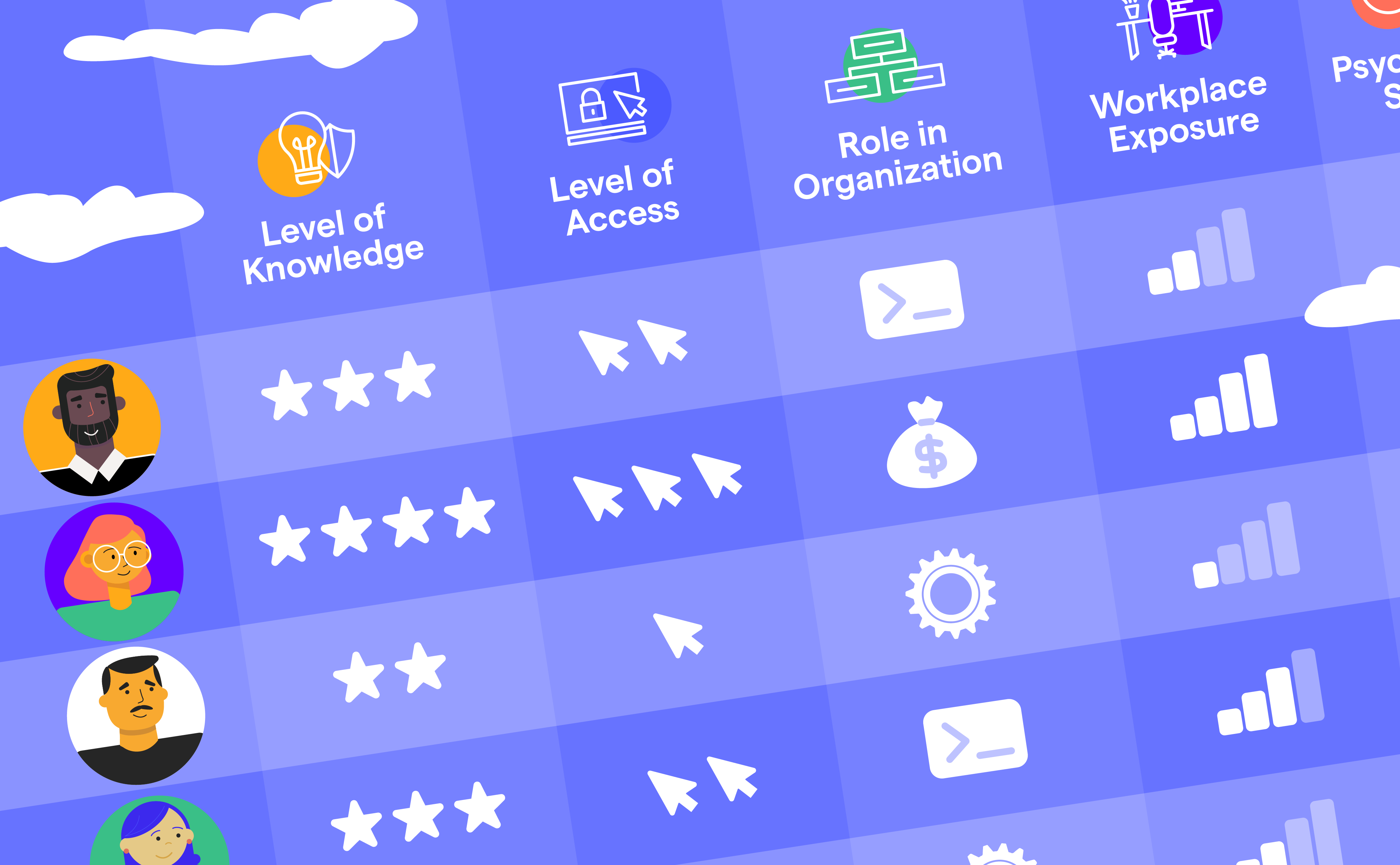

- Deepfake technology fundamentals: Employees need to understand what deepfakes actually are and how they are created. AI can generate synthetic audio and video using deep learning, which is why visual or voice confidence is no longer a reliable signal for authenticity.

- Multimodal simulation exercises: Training should include realistic simulations using fake audio, fake video, and combined media. Practising in these conditions builds experiential memory rather than abstract awareness, because recognition is learned through exposure rather than explanation.

- Role-specific deepfake scenarios: Different roles face different risks. Finance teams are more likely to receive fake CFO calls, while HR may see fake onboarding videos or candidate messages. Training that mirrors real job functions increases relevance and improves transfer to actual workplace behaviour.

- Reporting and verification protocols: Employees must be taught exactly how to respond when a request feels urgent but unusual. This includes secondary-channel confirmation, structured escalation paths, and clear checklists so that verification becomes a habit rather than a guess.

- Continuous refreshers for evolving threats: Deepfake technology evolves rapidly, so training cannot be a once-a-year exercise. Ongoing updates and periodic refreshers are needed to maintain readiness as attack techniques change.

- Feedback loops and behavioural measurement: Organisations should measure real behavioural signals, such as reporting rates and verification actions, instead of relying only on quiz scores. These feedback loops show whether training is changing how people act under pressure, not just what they remember.
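The behavioural signals named in the last item can be reduced to a few simple rates. The following is a minimal sketch, assuming a hypothetical record of how each employee responded to a simulated impersonation attempt; the `SimulationOutcome` fields and the metric names are illustrative assumptions, not taken from any specific platform.

```python
from dataclasses import dataclass


@dataclass
class SimulationOutcome:
    """One response to one simulated impersonation attempt (hypothetical schema)."""
    reported: bool   # the employee reported the request to security
    verified: bool   # the employee confirmed the request on a second channel
    complied: bool   # the employee carried out the requested action


def behavioural_rates(outcomes):
    """Return reporting, verification, and unverified-compliance rates as fractions."""
    total = len(outcomes)
    if total == 0:
        # No data yet: report zeros rather than dividing by zero.
        return {
            "reporting_rate": 0.0,
            "verification_rate": 0.0,
            "unverified_compliance_rate": 0.0,
        }
    reported = sum(o.reported for o in outcomes)
    verified = sum(o.verified for o in outcomes)
    # Riskiest behaviour: acting on the request without verifying it first.
    unverified = sum(o.complied and not o.verified for o in outcomes)
    return {
        "reporting_rate": reported / total,
        "verification_rate": verified / total,
        "unverified_compliance_rate": unverified / total,
    }


# Illustrative data only, not real results.
outcomes = [
    SimulationOutcome(reported=True, verified=True, complied=False),
    SimulationOutcome(reported=False, verified=False, complied=True),
    SimulationOutcome(reported=False, verified=True, complied=True),
    SimulationOutcome(reported=True, verified=False, complied=False),
]
rates = behavioural_rates(outcomes)
print(rates)
```

Tracking the unverified-compliance rate alongside reporting and verification rates is one way to see whether behaviour under pressure is changing, which a quiz score alone would not show.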

Deepfake readiness is an architecture problem and not a feature checklist

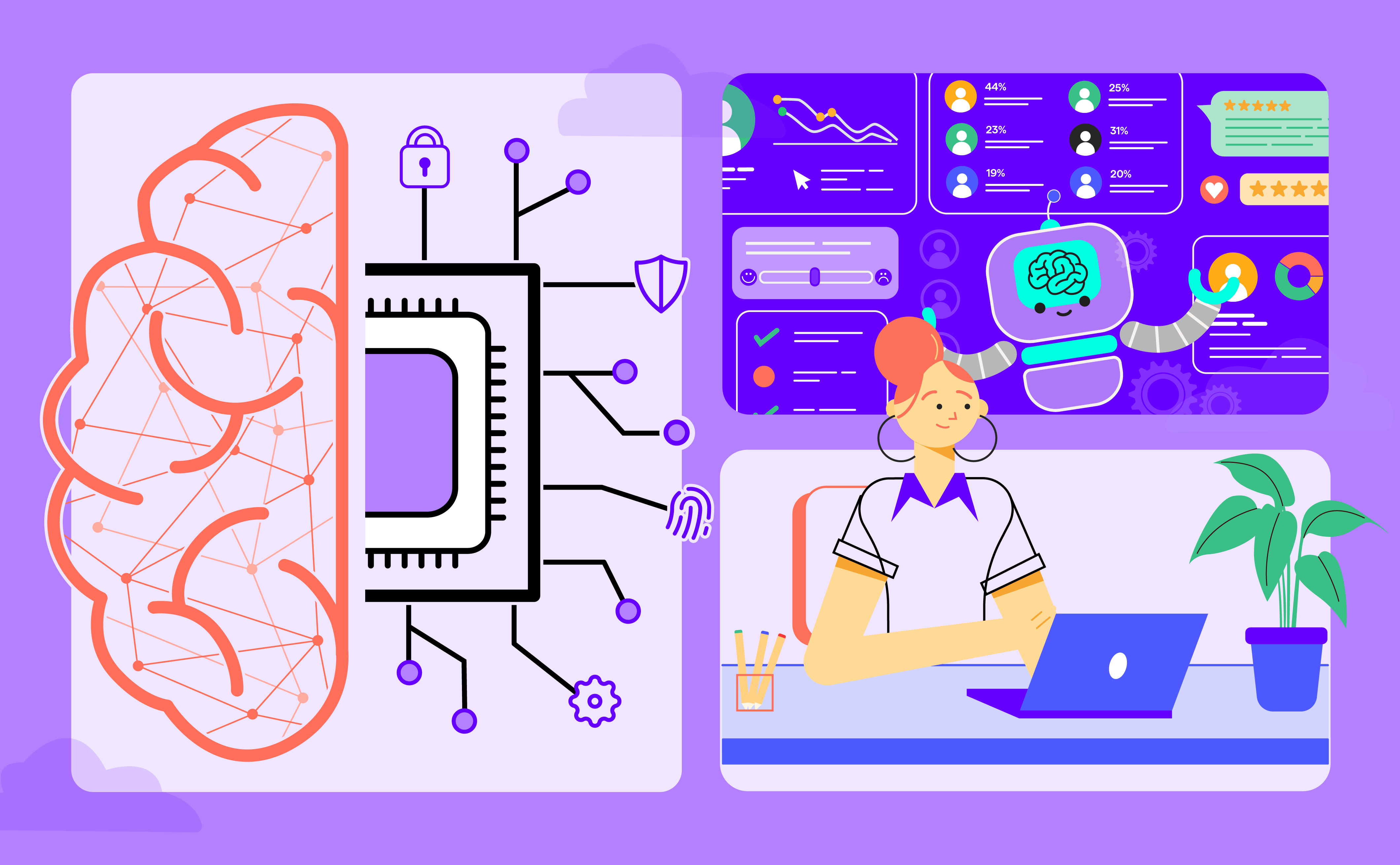

If deepfake attacks succeed at the moment a human makes a decision, then the real question is not which platform has the best deepfake video or the largest training library. The more important question is: which systems can see risky behaviour as it happens and which ones can intervene when it matters.

Most traditional security awareness platforms were designed for a different threat model: simulated phishing, scheduled training, and post-event reporting. Deepfakes challenge that model because they operate in live workflows, through trusted identities, and under time pressure. That means readiness is determined less by content and more by architecture, specifically whether a platform can integrate with real tools, react in real time, and model human risk beyond quiz scores.
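To make the idea of intervening at the moment of decision concrete, here is a minimal sketch of a point-of-risk check. It is not any vendor's implementation; the action names, the `Request` fields, and the returned step labels are all hypothetical. The rule deliberately ignores how convincing the caller looks or sounds and depends only on what is being asked and whether it has been confirmed on a second channel.

```python
from dataclasses import dataclass

# Hypothetical list of actions that should never run on trust alone.
SENSITIVE_ACTIONS = {"approve_payment", "change_payroll", "share_document"}


@dataclass
class Request:
    """A request received during a call or message (hypothetical schema)."""
    action: str
    urgent: bool                       # the requester is pushing for speed
    from_authority: bool               # the requester appears to be senior
    confirmed_on_second_channel: bool = False


def next_step(request: Request) -> str:
    """Decide what the employee should do before acting on the request."""
    if request.action not in SENSITIVE_ACTIONS:
        return "proceed"
    if request.confirmed_on_second_channel:
        return "proceed"
    # Urgency combined with authority is the pattern described above,
    # so it triggers escalation as well as verification.
    if request.urgent and request.from_authority:
        return "verify_and_escalate"
    return "verify"


call = Request(action="approve_payment", urgent=True, from_authority=True)
print(next_step(call))
```

The design choice worth noting is that perceived authenticity is not an input at all: a sensitive request is verified because of what it asks for, not because something looked suspicious.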

The comparison below reframes deepfake preparedness through this architectural lens.

OutThink vs Competitor Benchmarking (2026)

| Vendor | SOC/Live Tool Integration | Real-Time Point-of-Risk Nudges | Predictive Risk Index (HRI) | Non-Email Workforce Support |
|---|---|---|---|---|
| OutThink | ✅ | ✅ | ✅ | ✅ |
| KnowBe4 | ❌ | ❌ | ◐ | ❌ |
| Proofpoint | ◐ | ◐ | ◐ | ❌ |
| Hoxhunt | ❌ | ✅ | ◐ | ❌ |
| Adaptive Security | ❌ | ❌ | ✅ | ❌ |
| Keepnet Labs | ❌ | ❌ | ◐ | ❌ |
| AwareGO | ❌ | ❌ | ❌ | ❌ |
| Breacher.ai | ❌ | ❌ | ❌ | ❌ |

OutThink

OutThink treats deepfakes as a human decision-making problem rather than a media-detection problem. Instead of asking employees to “spot” synthetic faces or voices, its training is built around how people respond to authority, urgency, and normal-looking requests. This makes it structurally better aligned to deepfake attacks, which succeed by blending into everyday work rather than by looking technically flawed. OutThink’s behaviour-first design reframes deepfake awareness as the ability to interrupt risky actions and verify before execution, even when the interaction feels completely legitimate.

Key points:

- Behavioural training focused on judgment and interruption during deepfake-style impersonation

- Scenarios built around authority spoofing and urgent executive requests

- Adaptive learning based on how users respond to realistic impersonation attempts

- Emphasis on verification behaviour rather than visual or audio detection

Adaptive Security

Adaptive Security approaches deepfakes through multichannel attack simulation, exposing employees to voice, conversational, and blended impersonation attempts that mirror real-world social engineering flows.

Key points:

- Voice and conversational deepfake-style phishing simulations

- Cross-channel impersonation scenarios (email, SMS, calls)

- Micro-lessons tied to impersonation failures

- Focus on realism of attack delivery

KnowBe4

KnowBe4 integrates deepfake awareness into its broader training ecosystem and allows organisations to create custom synthetic media scenarios using familiar internal figures to demonstrate impersonation risk.

Key points:

- Deepfake awareness modules within a large training library

- Custom synthetic video scenarios using leadership likeness

- Impersonation-based social engineering exercises

- Content-driven exposure to deepfake risks

Hoxhunt

Hoxhunt embeds deepfake-style impersonation into its adaptive, gamified training model, reinforcing safe responses to AI-enabled social engineering over time.

Key points:

- AI-powered impersonation and deepfake-style simulations

- Adaptive difficulty based on user behaviour

- Gamified reinforcement of safe response patterns

- Emphasis on repeated exposure to impersonation tactics

Proofpoint Security Awareness

Proofpoint incorporates deepfake risk into phishing and impersonation training informed by real-world threat intelligence from its security ecosystem.

Key points:

- Impersonation scenarios informed by deepfake threat trends

- Training aligned with real attack data

- Integration with fraud and identity-based attack patterns

- Contextualised deepfake awareness within phishing training

Breacher.ai

Breacher.ai focuses on generating synthetic voice and video for social engineering simulations, allowing employees to experience realistic AI-generated impersonation attempts.

Key points:

- AI-generated deepfake voice and video simulations

- Impersonation-focused social engineering exercises

- Micro-training linked to synthetic media attacks

- Behavioural response analysis for deepfake scenarios

Keepnet Labs

Keepnet Labs combines deepfake-enabled simulations with behavioural analytics to track how employees respond to voice and video impersonation attempts.

Key points:

- Deepfake voice and video attack simulations

- Real-time feedback after impersonation attempts

- Behavioural metrics tied to deepfake response

- Adaptive training based on impersonation outcomes

AwareGO

AwareGO addresses deepfakes primarily through narrative-driven awareness content that explains manipulation and trust exploitation rather than technical spoofing.

Key points:

- Story-based training on impersonation and trust abuse

- Psychological framing of deepfake manipulation

- Limited technical deepfake simulation

- Awareness-led rather than simulation-led

CyberTraining365

CyberTraining365 covers deepfake risks within its broader phishing and fraud training catalogue, with limited focus on AI-driven impersonation mechanics.

Key points:

- General awareness of deepfake-enabled scams

- Email and message-based impersonation coverage

- Limited synthetic media simulation

- Primarily content-based training

The real goal of deepfake awareness training going forward

For years, awareness programmes have trained employees to look for clues, scan for mistakes, and decide whether something is real or fake. That model assumes detection is the main skill, and deepfakes break that assumption. When faces, voices, language, and context all line up, there is often nothing obvious left to detect.

What training must build instead is behavioural resilience: the ability to interrupt a moment, slow down a decision, and choose verification over execution when something feels urgent or authoritative. The skill to build is recognising when to stop and question.

This is where approaches like OutThink’s behaviour-first model matter. Rather than asking employees to become human deepfake detectors, the focus shifts to how people make decisions under pressure, how they respond to authority cues, and whether they know how to safely stop and verify before acting.

The outcome is not perfect detection, but reliable judgment.

This is part of a broader movement toward human-centric cybersecurity, where secure behaviour replaces the perimeter as the primary line of defence.