Designing Human-Centric Cybersecurity

Dec 01

Introduction

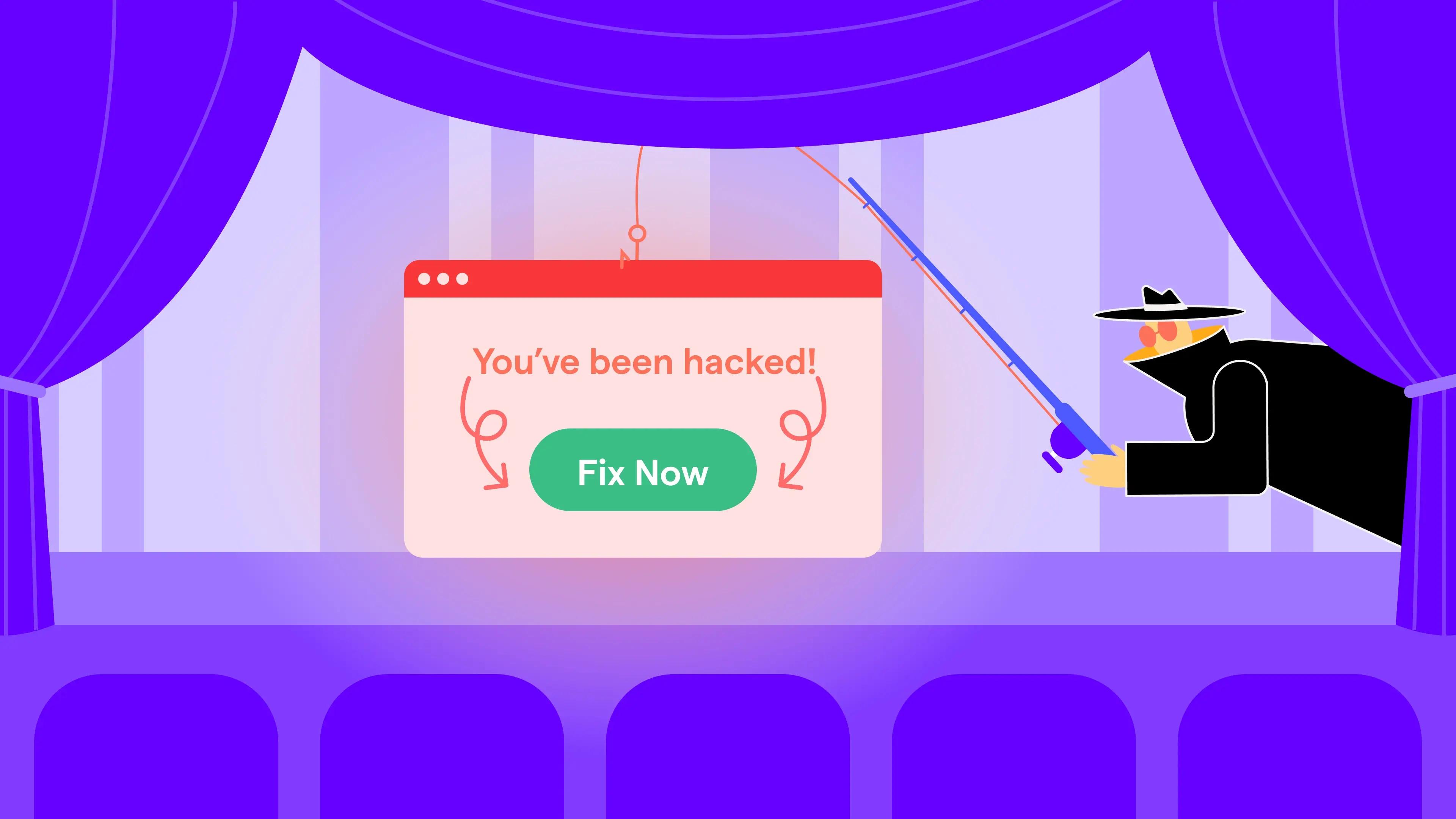

Building on the human factor, the next step is to design systems and policies that align with how people actually behave. This is where behavioral economics, persuasion theory, and practical design principles converge with security strategy.

Password Complexity and Behavioral Nudges

Take password policies as an example. Excessive complexity - long minimum lengths, mandatory symbols, or frequent forced rotation - can backfire. Users respond by recycling old passwords or writing them down, which defeats the purpose of the policy.

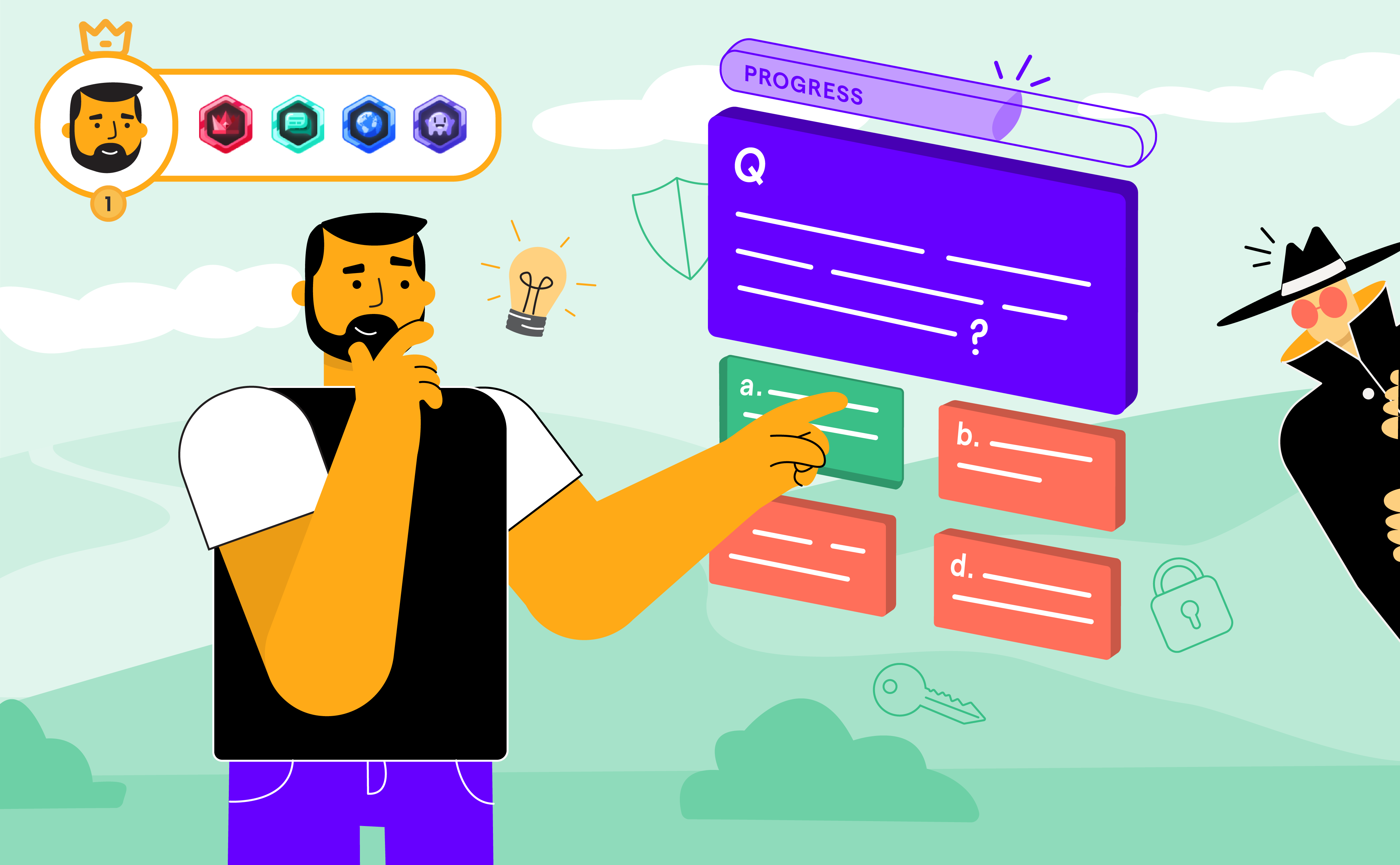

A more effective approach is to enforce a solid baseline while using behavioral nudges to encourage voluntary improvement. For instance, subtle prompts during password setup can remind users of best practices or show comparative strength scores. Over time, such nudges can measurably increase overall password quality - even if only 10% of users choose stronger credentials, that represents a collective reduction in risk.
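As a rough illustration of the "solid baseline plus nudge" idea, the sketch below rejects only passwords that miss a minimum length and otherwise accepts them, adding a comparative prompt when the estimated strength falls below a peer figure. Everything here is an assumption made for illustration: the function names, the 12-character baseline, the 70-bit peer median, and the crude pool-size strength estimate, which is not a substitute for a real strength estimator.

```python
import math
import string


def estimate_strength_bits(password: str) -> float:
    """Crude upper-bound estimate: length * log2(size of the character pool used).

    Illustrative only: it ignores dictionary words and common patterns.
    """
    pool = 0
    if any(c in string.ascii_lowercase for c in password):
        pool += 26
    if any(c in string.ascii_uppercase for c in password):
        pool += 26
    if any(c in string.digits for c in password):
        pool += 10
    if any(c in string.punctuation for c in password):
        pool += len(string.punctuation)
    if pool == 0:
        # Empty password, or only characters outside the classes above.
        return 0.0
    return len(password) * math.log2(pool)


def nudge_message(password: str,
                  min_length: int = 12,             # hypothetical enforced baseline
                  peer_median_bits: float = 70.0):  # hypothetical peer comparison value
    """Enforce the baseline, then nudge (never block) toward stronger choices."""
    if len(password) < min_length:
        return f"Rejected: use at least {min_length} characters."
    if estimate_strength_bits(password) < peer_median_bits:
        return ("Accepted. Most colleagues chose a stronger password; "
                "adding a few more characters would put you ahead.")
    return "Accepted. Your password is stronger than most in your organization."


if __name__ == "__main__":
    for candidate in ["short", "abcdefghijkl", "correcthorsebattery"]:
        print(candidate, "->", nudge_message(candidate))
```

The design choice worth noting is that only the baseline check can reject a password; the comparative message is advisory, so the improvement it encourages stays voluntary.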

Broader Human-Risk Applications

These same principles apply across many information security practices:

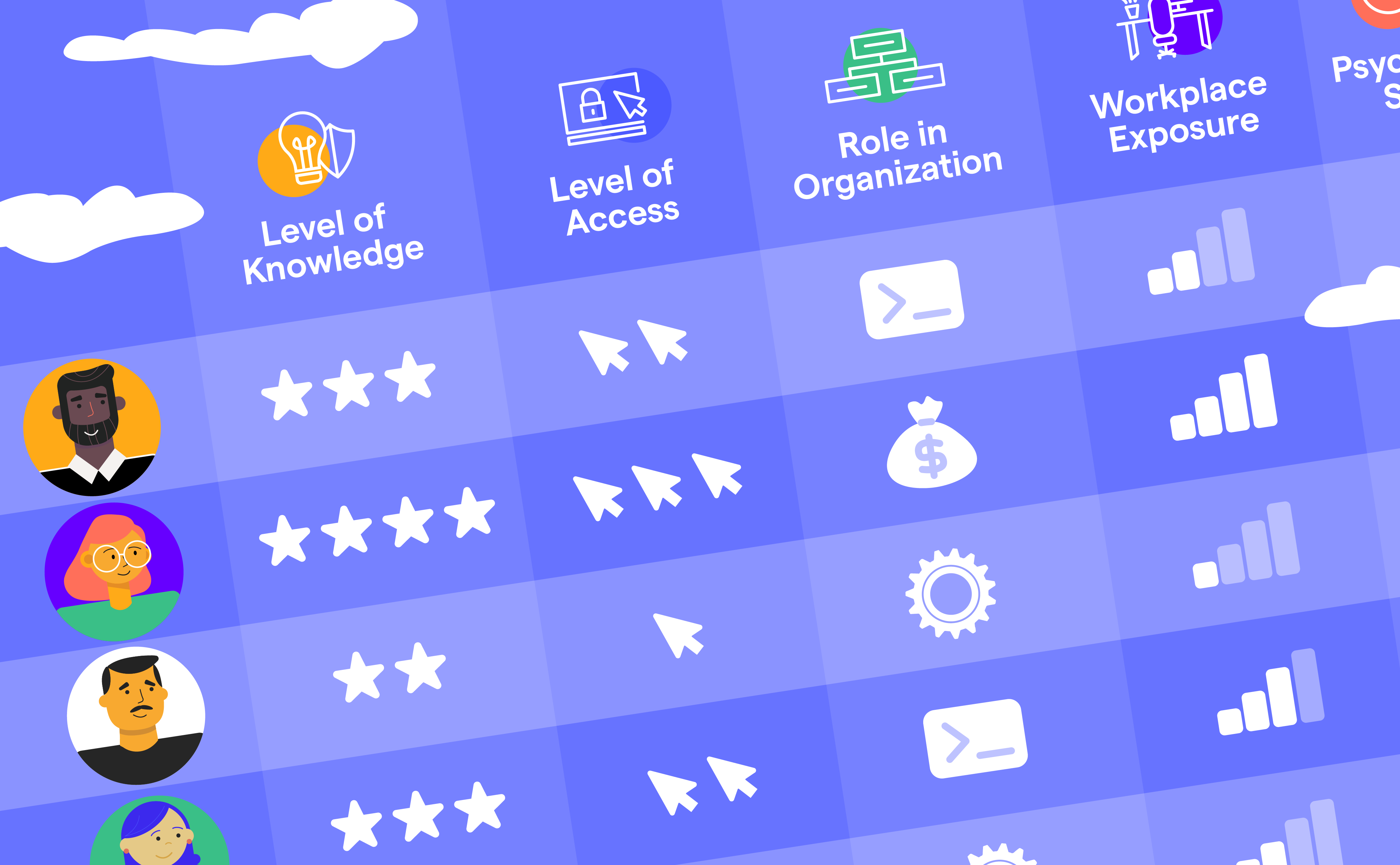

- User Access and Privilege Reviews: Managers can be prompted to challenge unnecessary permissions or request simplified, role-based access groupings they can understand.

- Device Update Compliance: Delays in installing patches accumulate into thousands of collective “vulnerable days.” Persuasive design, together with persuasion techniques borrowed from social engineering, can encourage faster responses.

- Disaster Recovery Participation: Framing DR and Business Continuity testing as engaging, collaborative exercises - not chores - improves turnout and realism.

- HR Off-boarding Triggers: Timely deactivation of departing employees’ access often depends on managerial discipline. Automated reminders and accountability mechanisms can close this gap.

- User Acceptance Testing: As agile development increases change frequency, effective persuasion and gamification can motivate users to complete meaningful UAT rather than superficial log-ins.

Across all these examples, the common thread is behavioral activation - creating conditions where doing the secure thing is easier, quicker, or more rewarding than not doing it.
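The "vulnerable days" idea from the device update example can be made concrete with a small calculation: for each device, count the days between a patch's release and its installation, then sum across the fleet. The sketch below assumes one install date per device and uses invented dates; the function name and data are hypothetical.

```python
from datetime import date


def vulnerable_days(patch_released: date, installed_on: list[date]) -> int:
    """Sum, across devices, the days each one ran without the patch.

    Installs dated on or before the release day contribute zero.
    """
    return sum(max((installed - patch_released).days, 0)
               for installed in installed_on)


if __name__ == "__main__":
    released = date(2024, 3, 1)  # made-up release date
    # Made-up install dates for three devices: 2, 5, and 10 days late.
    installs = [date(2024, 3, 3), date(2024, 3, 6), date(2024, 3, 11)]
    print(vulnerable_days(released, installs))
```

Tracking this figure before and after introducing a reminder or prompt gives a simple way to check whether the nudge actually shortened response times.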

Cultural Accountability and Decision-Making

In practice, many controls still depend on humans. Even automated processes often include a “human in the loop” who must make decisions under uncertainty and with limited information. Corporate culture sometimes discourages individual accountability in favor of committee consensus. Although well-intentioned, this preference can delay responses and increase risk exposure.

Changing culture is hard, but not impossible. Borrowing proven methods from social engineering, marketing, and consumer psychology can help shift behavior without heavy-handed enforcement. These approaches are often cheaper and more sustainable than new tools that add complexity but not value.

Conclusion

In cybersecurity, we already possess the technology - what we lack is alignment, empathy, and design tuned to human nature. By augmenting technical controls with behavioral insight, organizations can build resilience that is measurable, cost-effective, and human-first.

True progress lies not in more tools, but in smarter, psychologically aware systems that turn people - once considered the weakest link - into the strongest line of defense.