Beyond Tools - The Human Factor in Cybersecurity

Dec 01


Introduction

No organization can operate sustainably in an ideal state, so we must find pragmatic ways to reduce risk under imperfect conditions. In cybersecurity, the most effective approach is to recognize that the human factor remains central, not only as a point of vulnerability but also as a key driver of resilience.

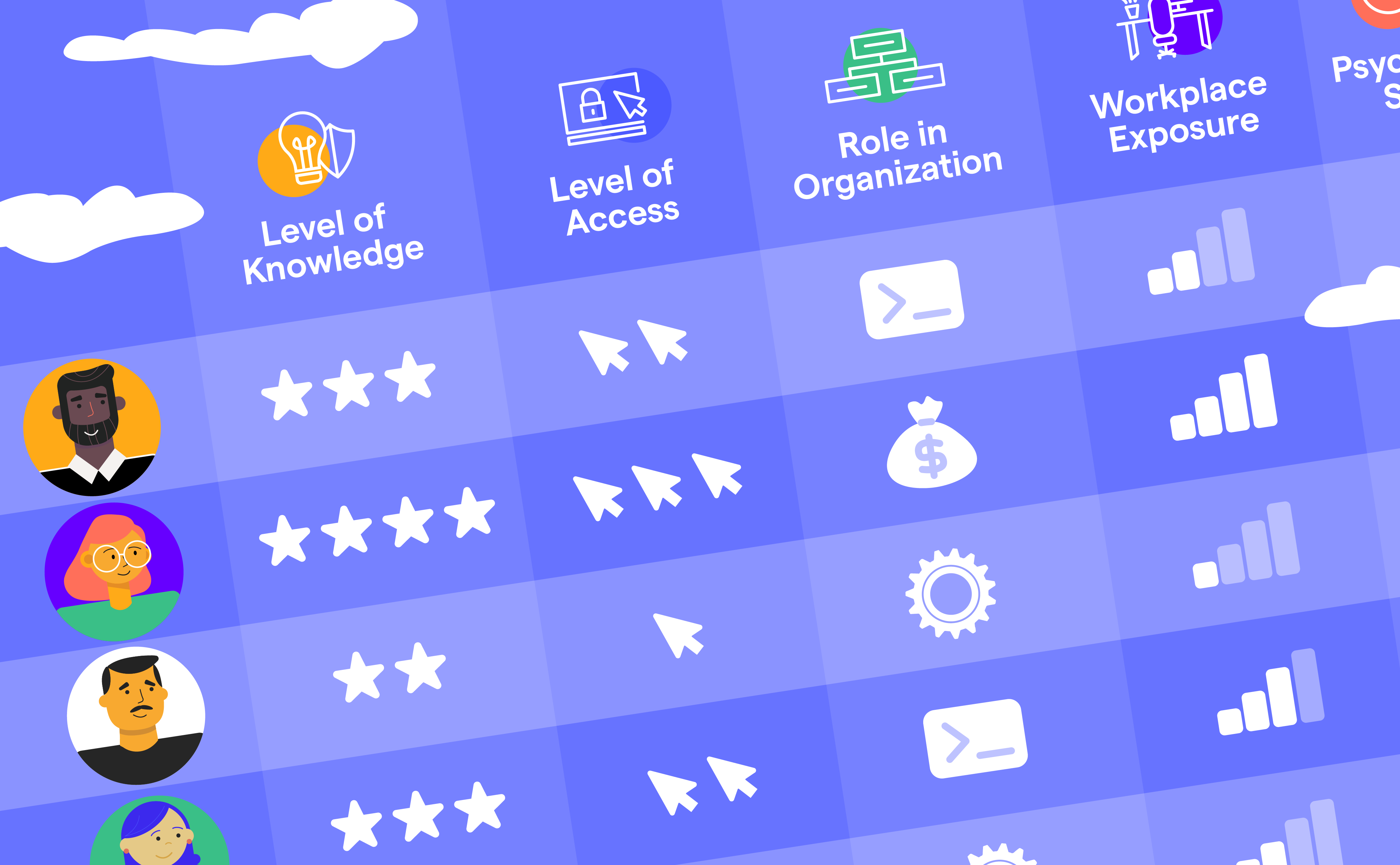

Human-centric thinking is already embedded in most frameworks through training, awareness campaigns, and exercises like penetration testing, phishing simulations, and Cyber Awareness Months. But these often remain surface-level, focusing on compliance rather than deep behavioral change.

Humans as the Real “Soft Targets”

To understand this dynamic, consider the analogy of encryption. The algorithm represents technology, while its implementation represents the human factor. Even the strongest encryption is vulnerable if implemented poorly. Similarly, even the best security architecture can fail through human error.

Research consistently shows that the majority of data breaches stem from human mistakes. IBM’s finding that 8% of users cause 80% of issues, a concentration even sharper than the familiar 80/20 rule, illustrates how unevenly this risk is distributed. Other studies show that roughly half of all employees want to act securely but simply don’t know how.

This gap between intent and behavior is both the challenge and the opportunity for cybersecurity.
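
The concentration of risk described above can be checked against an organization's own incident data. The following is a minimal sketch, not a method taken from the cited research: it assumes a hypothetical log with one user ID per incident and computes the smallest share of the workforce that accounts for a target fraction of incidents. The function name, the log format, and the sample figures are all illustrative assumptions.

```python
from collections import Counter


def smallest_user_share(incident_users, total_users, target=0.8):
    """Return the fraction of all users needed to cover `target` of incidents.

    incident_users: iterable of user IDs, one entry per incident
                    (hypothetical log format, assumed for illustration).
    total_users:    full headcount, including users with no incidents.
    """
    counts = Counter(incident_users)
    total_incidents = sum(counts.values())
    if total_incidents == 0 or total_users <= 0:
        return 0.0

    covered = 0
    users_needed = 0
    # Walk users from most to fewest incidents until the target share is covered.
    for _, n in counts.most_common():
        covered += n
        users_needed += 1
        if covered >= target * total_incidents:
            break
    return users_needed / total_users


# Made-up sample: 10 incidents spread over 3 of 25 users.
sample_log = ["alice"] * 6 + ["bob"] * 3 + ["carol"]
share = smallest_user_share(sample_log, total_users=25)
print(f"{share:.0%} of users account for at least 80% of incidents")
```

A result far below 20% would suggest that targeted coaching for a small group may reduce more risk than another organization-wide campaign.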

Behavioral Levers and Emotional Design

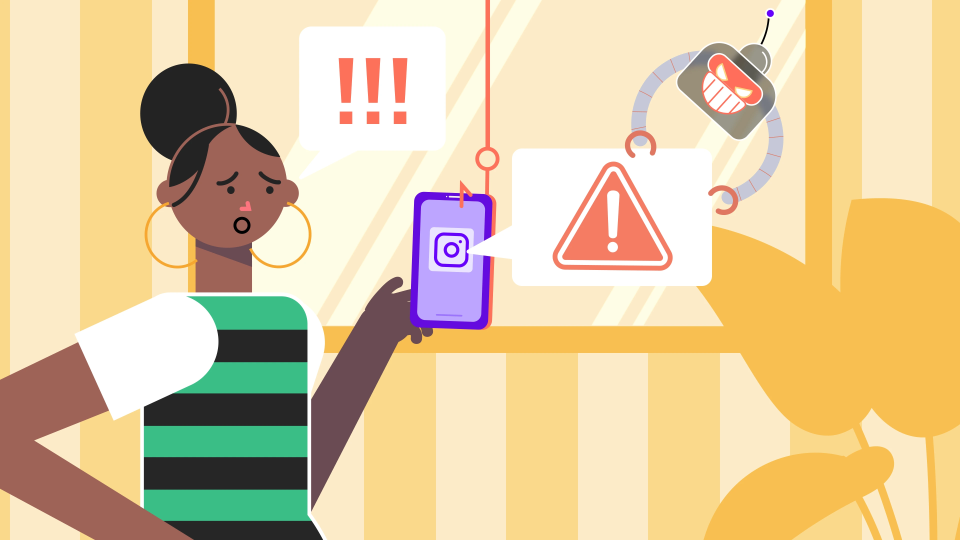

Human behavior can be influenced - ethically and positively - using methods long proven in fields like marketing, design, and therapy. Casinos, for instance, master sensory influence through lighting, sound, and layout to guide customer behavior. The same psychological principles can be used for good: designing systems that support user resilience and reduce emotional vulnerability.

For example, emotional states heavily influence decision-making in phishing scenarios. Most people who fail phishing tests vividly recall the moment they clicked the link - knowing even then that it was the wrong choice. They attribute the lapse to stress, distraction, or urgency.

This emotional awareness can be leveraged for learning. Imagine AI-driven chat agents following up minutes after a phishing test failure, guiding users through reflection and gentle reinforcement. These post-event “nudges” could build retention and empathy far more effectively than traditional reprimands.

Managing Stress and Cognitive Load

Monitoring digital activity patterns can also reveal stress indicators. Elevated stress correlates with impulsive behavior, increasing the risk of security mistakes. By recognizing high-stress moments - such as when a user is multitasking intensely across chats, emails, and browsers - systems could temporarily slow external communications or suppress non-critical notifications, reducing exposure to phishing attempts.

This isn’t about surveillance but about context-aware protection. GenAI models can help design such adaptive systems, nudging users into calmer, more focused states - an effect that can be measured through reduced incident rates.
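
To make the idea of context-aware protection concrete, here is a minimal sketch of one possible heuristic. It assumes that rapid switching between applications is a usable proxy for high cognitive load, which is an assumption for illustration rather than an established measure. The class name, the window length, and the switch threshold are all hypothetical, and only the timing of focus changes is recorded, not content.

```python
from collections import deque


class ContextSwitchMonitor:
    """Flags high-load moments from the rate of app switches (hypothetical proxy)."""

    def __init__(self, window_seconds=60.0, max_switches=8):
        self.window_seconds = window_seconds
        self.max_switches = max_switches
        self._switch_times = deque()  # timestamps of recent app switches
        self._last_app = None

    def record_focus(self, app, timestamp):
        """Record that `app` gained focus at `timestamp` (in seconds)."""
        if self._last_app is not None and app != self._last_app:
            self._switch_times.append(timestamp)
        self._last_app = app
        self._evict(timestamp)

    def _evict(self, now):
        # Drop switches that have fallen out of the sliding window.
        while self._switch_times and now - self._switch_times[0] > self.window_seconds:
            self._switch_times.popleft()

    def is_high_load(self, now):
        self._evict(now)
        return len(self._switch_times) > self.max_switches


def should_deliver(monitor, now, critical):
    """Critical notifications always pass; others are held during high load."""
    return critical or not monitor.is_high_load(now)


monitor = ContextSwitchMonitor(window_seconds=60.0, max_switches=3)
for t, app in [(0, "chat"), (5, "mail"), (10, "browser"), (15, "chat"), (20, "mail")]:
    monitor.record_focus(app, t)

print(should_deliver(monitor, now=20, critical=False))   # held during the burst
print(should_deliver(monitor, now=100, critical=False))  # delivered once activity calms
```

Holding rather than discarding non-critical messages keeps the intervention reversible, and a real deployment would need thresholds tuned per user along with clear consent.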

Conclusion

Tools alone can’t secure an organization; people can. By understanding behavior, stress, and motivation, we can design security cultures that reinforce awareness instead of fatigue. Human-centric cybersecurity recognizes that resilience begins not in code or policy, but in emotion and cognition - the way people think, feel, and act under pressure.