GenAI and the Illusion of Control: Why Enterprise Software Is Quietly Undermining Data Security

Jan 20

Introduction

Spending millions of dollars on security systems while leaving the barn door open through GenAI features in enterprise software is becoming an increasingly common pattern.

Little has changed over the past few decades. Security and data protection are still not taken seriously enough and are often treated as a complicating nuance, something to be worked around as long as there are no immediate legal implications.

This becomes particularly visible in the way GenAI integrations are being rushed into enterprise software environments in the name of cost reduction and “future-readiness,” often without answering basic questions of control and accountability.

Two simple but fundamental cases illustrate this for the GenAI integrations that almost every company is currently pursuing. An organization that cannot answer the questions each case raises, and therefore cannot demonstrate control, is severely neglecting the fundamental protection of its data and intellectual property.

Scenario: Enterprise HR Software with GenAI Integration

Consider an enterprise service software integration for Human Resources (HR). In this scenario, the HR system uses a GenAI feature to help team managers create compensation reports across their teams, for example reports showing average and median salaries or bonuses within a team.

These reports must be calculated using data that is already available and accessible within the HR system. Typically, such a system is governed by an Identity and Access Management (IAM) process in which each line manager has access only to the data pertaining to their role: in this case, compensation data for the teams they manage, not for teams outside their own reporting structure.

This is a baseline security expectation in enterprise systems.
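As a point of reference, the baseline expectation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular HR product works: the `Employee` records, the `MANAGER_SCOPE` mapping, and the `compensation_report` function are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass(frozen=True)
class Employee:
    name: str
    team: str
    salary: float


# Hypothetical sample data; a real HR system would hold this in its own store.
EMPLOYEES = [
    Employee("Ana", "payments", 82000.0),
    Employee("Ben", "payments", 90000.0),
    Employee("Cho", "payments", 101000.0),
    Employee("Dev", "platform", 120000.0),
]

# Hypothetical IAM mapping: manager id -> teams that manager may see.
MANAGER_SCOPE = {
    "mgr-payments": {"payments"},
    "mgr-platform": {"platform"},
}


def compensation_report(manager_id: str, team: str) -> dict:
    """Return average and median salary for a team the manager may see."""
    allowed = MANAGER_SCOPE.get(manager_id, set())
    if team not in allowed:
        # The check happens before any compensation data is read.
        raise PermissionError(
            f"{manager_id} may not read compensation for team {team!r}"
        )
    salaries = [e.salary for e in EMPLOYEES if e.team == team]
    return {"team": team, "average": mean(salaries), "median": median(salaries)}
```

Here `compensation_report("mgr-payments", "payments")` returns the team's figures, while `compensation_report("mgr-payments", "platform")` raises `PermissionError`. The two cases that follow ask whether a GenAI layer placed on top of the same data still behaves this way.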

Case 1: The GenAI Already “Knows” the Answer

In the first case, the HR software’s GenAI already knows the answer because it has been trained or fine-tuned on the underlying raw data set. It therefore has intrinsic knowledge that allows it to generate responses immediately.

The key question is:

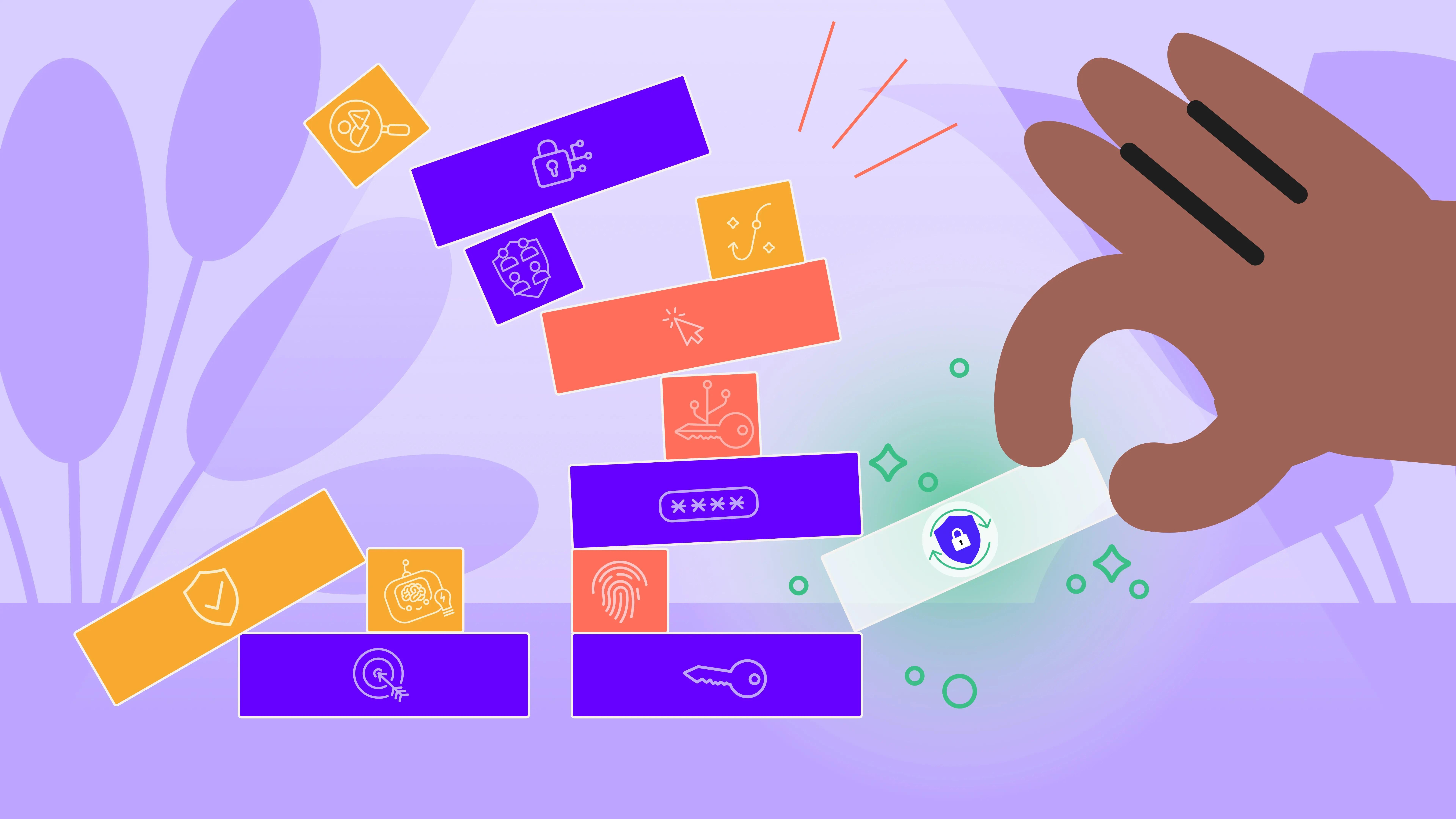

How is the GenAI governed and controlled to ensure it follows the required Role-Based Access Control (RBAC) model? Specifically, how does the system assure that sensitive compensation information is provided only to authorized individuals, or to authorized non-human entities acting on their behalf?

Case 2: The GenAI Actively Retrieves the Data

In the second case, the HR software’s GenAI does not yet know the answer. Instead, it follows a reasoning process: identifying relevant data sources, accessing the data, and calculating results in response to the user’s request.

This raises a different but equally critical set of questions:

How is the GenAI orchestrated to select and use exactly the role that matches the requester’s access privileges?

If the GenAI is operating with a broad or all-access system role, how is access restricted to ensure that only authorized data is queried and processed? For example, does the GenAI explicitly inherit and enforce the requester’s RBAC permissions throughout the entire reasoning process?
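One possible answer to these questions is to bind the requester's permissions to the data-access tool itself, outside the model's control, so that every query made during the reasoning process is checked and logged. The Python sketch below shows the idea under stated assumptions: `Requester`, `ScopedSalaryTool`, `SALARIES`, and `answer` are hypothetical names, and `answer` is only a stand-in for a real GenAI orchestration loop.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Requester:
    user_id: str
    allowed_teams: frozenset


# Hypothetical salary store keyed by team.
SALARIES = {
    "payments": [82000.0, 90000.0, 101000.0],
    "platform": [120000.0, 135000.0],
}


class ScopedSalaryTool:
    """Data-access tool handed to the GenAI for a single request.

    The requester is fixed at construction by the application, so the
    model cannot widen its own access by changing tool arguments.
    """

    def __init__(self, requester: Requester):
        self._requester = requester
        self.audit_log = []  # (user_id, team, allowed) for every attempt

    def median_salary(self, team: str) -> float:
        allowed = team in self._requester.allowed_teams
        self.audit_log.append((self._requester.user_id, team, allowed))
        if not allowed:
            raise PermissionError(f"team {team!r} is outside the requester's scope")
        return median(SALARIES[team])


def answer(requester: Requester, requested_teams: list):
    """Stand-in for the GenAI reasoning loop, which decides what to query."""
    tool = ScopedSalaryTool(requester)
    results = {}
    for team in requested_teams:
        try:
            results[team] = tool.median_salary(team)
        except PermissionError:
            results[team] = "access denied"
    return results, tool.audit_log
```

With a requester scoped to `payments`, a call such as `answer(Requester("mgr-payments", frozenset({"payments"})), ["payments", "platform"])` yields a median for `payments` and `"access denied"` for `platform`, and the audit log records both attempts. The design choice that matters is that enforcement does not rely on the model's prompt or its good behavior; whether a given vendor's integration works this way is exactly what needs to be asked and verified.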

Why This Matters

Fundamentally, one of these two cases must be true, even in hybrid implementations that introduce additional layers between the request and the response. If an organization cannot clearly explain which case applies, and how access control is enforced, then it is not in control of its sensitive data.

In the context of HR systems, this lack of control almost certainly implies exposure to legal and regulatory risk. More broadly, it represents a failure of basic data governance at a time when GenAI systems are being granted unprecedented reach into enterprise data.

Regardless of the specific technical shortcomings or downstream consequences, the underlying issue is the same: a lack of due diligence and a troubling neglect of responsibility for protecting material data. If these questions are not being asked, if vendor responses are not being challenged, and if controls are not being validated as effective, then organizations are operating on an assumption of security rather than evidence of it.

In short, if you cannot clearly answer these questions, you do not have control, no matter how much you are spending on security tooling.