It’s Time to Make Peace With Imperfection in Cybersecurity Human Risk Management

Jun 27

I’m often asked, ‘What’s the difference between human risk management and traditional security awareness? Isn’t it just the same thing in different clothes?’ It’s a fair question.

My response? Human risk management is the industry finally letting go of the falling rock.

That rock is the illusion of perfection - the belief that with enough control, clean metrics, and precision, we can master the chaos of human behavior. We hold on tightly to our 0% phishing click rate goals, or a single “risk score” for the entire workforce, thinking it will steady us. We demand dashboards with normalized data and KPIs. We desperately want to feel in control.

But when it comes to people, that mindset holds us back.

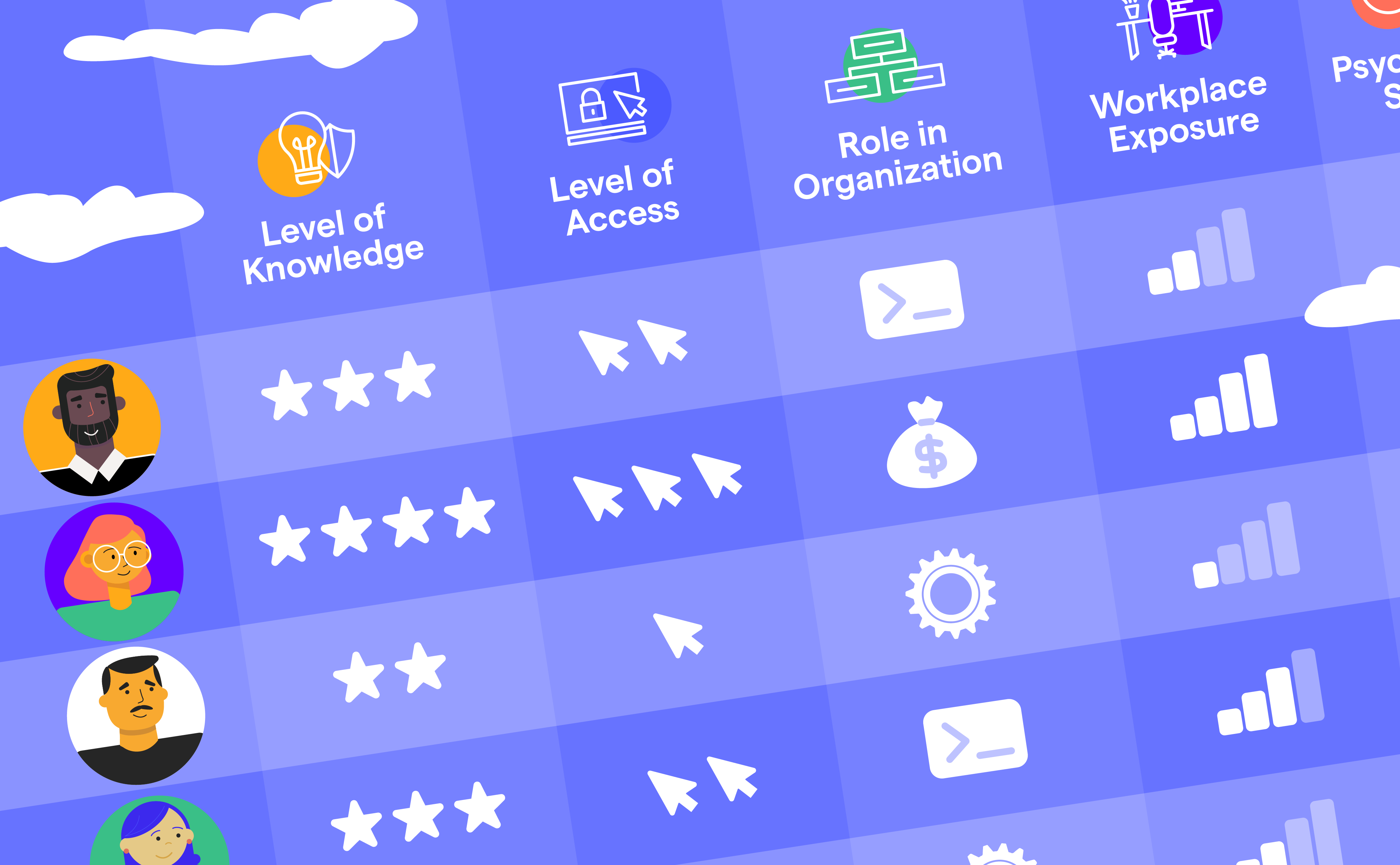

Perfect metrics are not just unrealistic, they’re misleading. Boiling complex human behavior down to a single number might look clinical, but it hides what really matters: patterns, context, change over time, and resilience. The pursuit of ‘perfect’ numbers can actually distract us from progress. Clinging to that illusion of control doesn’t make things worse per se, but it doesn’t change the fact that we’re still falling. Complexity, unpredictability, and messy human data are part of the terrain. The question isn’t whether we can stop the fall, it’s whether we can learn to navigate it.

Letting go doesn’t mean giving up on measurement or structure. It means trading a false sense of control for meaningful, adaptable insight. Because in cybersecurity human risk management, control doesn’t come from precision but from adaptability.

And to me, that’s the heart of it: human risk management is letting go of the rock.

The Fallacy of Perfect Measurements

When the U.S. Air Force was designing cockpits to fit the ‘average’ pilot across 10 body measurements in the 1950s, it ironically found that not a single pilot actually fit all 10 averages. As a result, the one-size-fits-all cockpit fit no one well and redesigns had to be based on ranges and flexibility instead. The most commonly used and ‘statistically accurate’ measure is the average. But designing interventions for the average person is often a bad idea because very few people actually sit at the average.

The same applies in cybersecurity training or human risk management interventions: targeting the ‘average user’ risks ignoring those who are most vulnerable or disengaged. For instance, a phishing simulation tailored to a statistically average response time may completely overlook users who are impulsive under pressure or those who process information more slowly. Instead of designing for a fictional middle, we must design with variance and adaptability in mind: focusing on behaviors, patterns, and edge cases that matter.
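To make the ‘average user’ problem concrete, here is a minimal sketch in Python. It is a simulation, not the Air Force data: the 4,000 people, the 10 independent standardized traits, and the 0.3 standard deviation tolerance are all assumptions chosen for illustration. It counts how many people sit close to the average on one trait, on three, and on all ten.

```python
import random


def share_near_average(people, dims, tolerance=0.3):
    """Fraction of people within `tolerance` standard deviations of the
    mean on every one of their first `dims` traits."""
    hits = sum(
        all(abs(value) <= tolerance for value in person[:dims])
        for person in people
    )
    return hits / len(people)


# Synthetic population (assumption): 10 independent traits per person,
# each already standardized to mean 0 and standard deviation 1.
rng = random.Random(0)
people = [[rng.gauss(0, 1) for _ in range(10)] for _ in range(4000)]

for dims in (1, 3, 10):
    share = share_near_average(people, dims)
    print(f"near average on {dims} trait(s): {share:.2%}")
```

The share can only shrink as traits are added, because anyone who is near average on ten traits is also near average on the first three. Real behavioral traits are correlated rather than independent, so the drop in practice would be less steep than in this toy model, but the direction is the same.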

Unlike technical systems, human behavior doesn’t follow fixed rules. It varies with mood, workload, environment, and a dozen other unseen factors. Yet we often try to impose the same measurement expectations we use for machines onto people.

The Murkiness of Behavioral Metrics

Human-related data is often imprecise, scattered across systems, and shaped by context: think engagement rates, sentiment analysis, security culture surveys, or behavioral logs from phishing simulations. None of these offer a perfect picture on their own, but together they form a narrative. For example, if phishing simulations show that a certain team clicks more frequently in the late afternoon, that's a useful insight, even if it's not statistically perfect. It gives you a basis to start a conversation, target training, or investigate root causes.
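The late-afternoon example can be sketched in a few lines of Python. Everything here is hypothetical: the record format, the team names, the time-bucket boundaries, and the minimum sample size are assumptions for illustration, not the output of any particular phishing simulation tool.

```python
from collections import defaultdict


def time_bucket(hour):
    # Hypothetical bucket boundaries; adjust to your own working day.
    if hour < 12:
        return "morning"
    if hour < 15:
        return "early afternoon"
    return "late afternoon"


def click_rates(events, min_sends=3):
    """Click rate per (team, time bucket), skipping groups with fewer
    than `min_sends` simulations so tiny samples do not mislead."""
    sends = defaultdict(int)
    clicks = defaultdict(int)
    for team, hour, clicked in events:
        key = (team, time_bucket(hour))
        sends[key] += 1
        clicks[key] += int(clicked)
    return {key: clicks[key] / n for key, n in sends.items() if n >= min_sends}


# Made-up records: (team, hour the simulated phish arrived, clicked?)
events = [
    ("finance", 9, False),
    ("finance", 10, False),
    ("finance", 11, True),
    ("finance", 15, True),
    ("finance", 16, True),
    ("finance", 16, True),
    ("finance", 17, False),
    ("legal", 16, True),  # only one send, so it is left out of the result
]

rates = click_rates(events)
for key, rate in sorted(rates.items()):
    print(key, round(rate, 2))
```

The `min_sends` cutoff is the design choice worth noting: it does not make the numbers statistically rigorous, it just keeps a single click from a small team from looking like a pattern. What remains is directional, which is enough to start the conversation.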

The goal isn’t perfect measurement, it’s progress. Directionally useful insights help us ask better questions, focus our efforts, and improve outcomes. It’s not the number itself that matters, but what it tells you - and that often requires context. Being data-driven is good. Being information-driven is better. Behavioral improvement starts with action. Waiting for flawless metrics only delays meaningful progress.

Human Risk Management Is About Resilience, Not Elimination

No amount of training or tooling will reduce human error to zero.

But that's not the goal, nor should it be.

The real objective is to build resilient behaviors: people who report incidents quickly, recover from mistakes, and feel empowered to act securely. Metrics like redemption (users reporting a phish after clicking) or time-to-detect are signs of strength, not failure.
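As a rough sketch of what resilience metrics could look like next to click rate, the Python below computes a redemption rate and a median time-to-report from simulation results. The record fields and the sample values are invented for illustration, and time-to-report is used here as a stand-in for time-to-detect, which is an assumption on my part.

```python
from statistics import median


def resilience_summary(results):
    """Summarize click rate alongside two resilience signals."""
    clickers = [r for r in results if r["clicked"]]
    # Redemption: the user clicked, then reported the phish anyway.
    redeemed = [r for r in clickers if r["reported_after_minutes"] is not None]
    report_times = [
        r["reported_after_minutes"]
        for r in results
        if r["reported_after_minutes"] is not None
    ]
    return {
        "click_rate": len(clickers) / len(results),
        "redemption_rate": len(redeemed) / len(clickers) if clickers else None,
        "median_minutes_to_report": median(report_times) if report_times else None,
    }


# Made-up simulation results; None means the user never reported.
results = [
    {"clicked": True, "reported_after_minutes": 4},
    {"clicked": True, "reported_after_minutes": None},
    {"clicked": False, "reported_after_minutes": 2},
    {"clicked": False, "reported_after_minutes": None},
    {"clicked": True, "reported_after_minutes": 30},
]

summary = resilience_summary(results)
for name, value in summary.items():
    print(name, value)
```

Read together, these three numbers tell a different story than click rate alone: a team with a high click rate but a high redemption rate and fast reporting is recovering from mistakes, which is exactly the behavior we want to encourage.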

The shift from prevention to resilience requires a mindset change. That evolution acknowledges that human behavior is inherently variable and builds systems that support people when things go wrong. We need to stop trying to fit people into prefabricated boxes and start building boxes around people instead.

The Cost of Chasing Perfection

Trying to develop a ‘perfect’ set of human risk metrics can slow your program down. You can end up over-engineering solutions, missing the chance to iterate, or more commonly in my experience, over-measuring or getting distracted. If you are measuring everything, you aren’t measuring anything. And if you’re measuring only things that can be quantified and normalized, you are almost certainly missing the bigger picture.

Worse, this pursuit can isolate your team. Overly rigid standards can create barriers with stakeholders like HR, legal, or business units. When you accept that measurement will evolve, you're more open to collaboration and shared ownership of human risk.

Which, ultimately, is necessary.

Cybersecurity is everyone’s business. Accepting imperfection will break down silos. When you stop trying to control every variable, you start inviting other departments into the conversation. HR might not have your level of security knowledge, but they understand employee sentiment and engagement. Training teams know what resonates with learners.

Bringing these perspectives in helps shape a more accurate and actionable picture of cybersecurity human risk.

Iteration Over Perfection

Cybersecurity human risk management isn’t about eliminating risk, it’s about understanding, influencing, and improving how people behave in complex, high-stakes environments. It should be agile. Start with the data you have, define working models, and iterate as you learn. Your metrics need to be consistent, explainable, and relevant to your context. With time, you can refine them, validate them, and build a stronger foundation for decision-making.

By making peace with imperfection, we’re not lowering the bar but aligning it with reality. And in my opinion, that’s truly the core of human risk management. When we let go of the need for perfect measurement, of the desire for 0% risk, of generalized training based on the non-existent “average”, we open the door to real progress, faster iteration, and better collaboration.

Human behavior will never be as tidy as a server log. But it's just as measurable, and it's extremely influential. Start where you are, use what you have, and build from there. The path to stronger security lies in progression, not perfection.